Preface

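Recently, more and more partners have wanted to try out some work on K8S. Most of these requests are POCs or tests, and the reason is simple: Veeam acquired Kasten, whose software is dedicated to K8S backup, disaster recovery, and migration. Here I give a fairly simple walkthrough to give K8S beginners a feel for it. K8S experts, your suggestions are very welcome.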

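Key Sections of This Article

1. Basic K8S Concepts and Components

1.1 K8S Architecture Overview

2. Operating System and Network Environment Preparation

2.1 VM Environment Preparation

2.2 Network Environment Preparation

2.2.1 Give the nodes Internet access through NAT while keeping static IPs

2.2.2 Change the IP on Ubuntu 18.04 with netplan

2.2.3 Change the hostname

3. K8S Installation and Deployment

3.1 Preparing the K8S Installation Environment

3.1.2 Install the Docker Environment

3.2 K8S Installation

3.2.1 Install K8S from the Aliyun mirror

3.2.2 Cluster network settings

3.2.3 Create a configuration file for the cluster

3.3 K8S Cluster Configuration

3.3.1 Initialize the K8S cluster

3.3.2 Expand the K8S cluster

3.3.3 Complete the K8S cluster configuration

3.3.4 Configure the Master Node taints

3.3.5 Add Node2 to the cluster to complete the configuration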

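1. Basic K8S Concepts and Components

1.1 K8S Architecture Overview

Before you start building a Kubernetes cluster, you need to understand some related basic concepts and terms. This section introduces the basic Kubernetes components, which give you the knowledge you need to get started.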

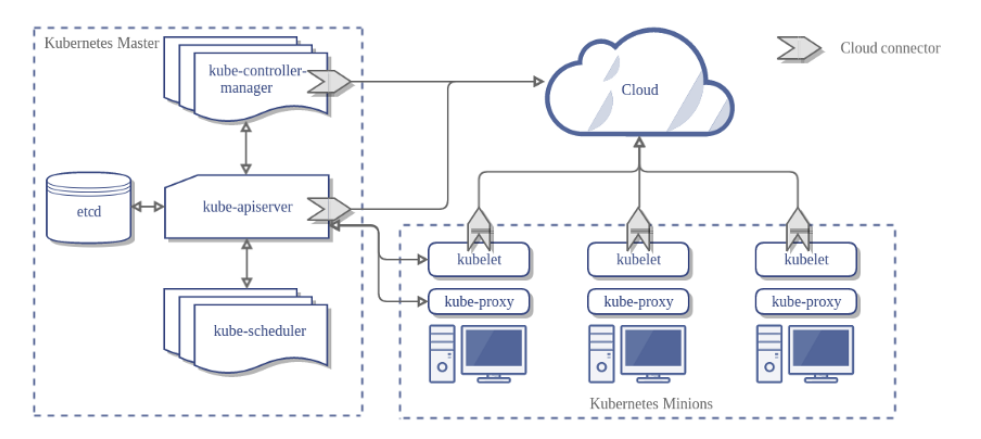

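The figure above gives an overview of the K8S architecture. In short, a K8S cluster is made up of nodes, which are physical or virtual machines with Kubernetes installed. Nodes are either Master Nodes or Worker Nodes, and the Pods on a node are where containers are started and run. Spreading the workload across several nodes reduces the impact of a single failure. All nodes in the cluster are managed by the Kubernetes Master.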

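Kubernetes consists mainly of the following core components:

etcd: the database that stores the state of the entire cluster;

apiserver: the only entry point for resource operations, providing authentication, authorization, access control, and API registration and discovery;

controller manager: maintains the cluster state, for example failure detection, auto-scaling, and rolling updates;

scheduler: schedules resources, placing Pods on suitable machines according to the configured scheduling policies;

kubelet: maintains the container lifecycle and also manages volumes (CSI) and networking (CNI);

Container runtime: handles image management and actually runs Pods and containers (CRI);

kube-proxy: provides in-cluster service discovery and load balancing for Services;

Besides the core components, there are some recommended add-ons, some of which have become CNCF-hosted projects:

CoreDNS: provides DNS for the whole cluster

Ingress Controller: provides external access to services

Prometheus: provides resource monitoring

Dashboard: provides a GUI

Federation: manages clusters across availability zones

This article is a beginner's journey, so it does not cover much theory. If you need more background, consult the official Kubernetes documentation.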

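2. Operating System and Network Environment Preparation

2.1 VM Environment Preparation

We will prepare three hosts in VMware Workstation and build them into a K8S cluster for the rest of this article: one Master Node and two Worker Nodes. The plan is as follows.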

| Node | IP |
|---|---|
| k8smaster | 172.16.60.60 |
| k8snode1 | 172.16.60.61 |
| k8snode2 | 172.16.60.62 |

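2.2 Network Environment Preparation

To help readers build their own K8S quickly with this document, we share some of our experience with the network setup here.

2.2.1 Give the nodes Internet access through NAT while keeping static IPs

On the host, open the vmnet8 DHCP configuration (this path is for VMware Fusion on macOS):

sudo vim /Library/Preferences/VMware\ Fusion/vmnet8/dhcpd.conf

You will see a configuration file like the one below. Note the range and option routers entries:

- range is the pool of addresses handed out by DHCP.
- option routers is the gateway.

The static IPs in our plan (172.16.60.60 to 172.16.60.62) are inside the 172.16.60.0/24 subnet but outside the DHCP range, so they cannot collide with addresses leased to other VMs.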

# Written at: 10/27/2019 10:49:26

allow unknown-clients;

default-lease-time 1800; # default is 30 minutes

max-lease-time 7200; # default is 2 hours

subnet 172.16.60.0 netmask 255.255.255.0 {

range 172.16.60.128 172.16.60.254;

option broadcast-address 172.16.60.255;

option domain-name-servers 172.16.60.2;

option domain-name localdomain;

default-lease-time 1800; # default is 30 minutes

max-lease-time 7200; # default is 2 hours

option netbios-name-servers 172.16.60.2;

option routers 172.16.60.2;

}

host vmnet8 {

hardware ethernet 00:50:56:C0:00:08;

fixed-address 172.16.60.1;

option domain-name-servers 0.0.0.0;

option domain-name "";

option routers 0.0.0.0;

}

####### VMNET DHCP Configuration. End of "DO NOT MODIFY SECTION" #######
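The static addresses in the plan must meet four conditions:

- They sit inside the vmnet8 subnet.
- They sit outside the DHCP pool (172.16.60.128 to 172.16.60.254).
- They are not the network or broadcast address.
- They do not collide with the gateway (172.16.60.2) or the host's vmnet8 address (172.16.60.1).

Below is a minimal POSIX shell sketch of that check. ip_to_int and is_safe_static_ip are hypothetical helpers, and the constants are copied from the dhcpd.conf above.

```shell
#!/bin/sh
# Values copied from the vmnet8 dhcpd.conf shown above.
SUBNET=172.16.60.0
NETMASK=255.255.255.0
RANGE_START=172.16.60.128
RANGE_END=172.16.60.254
RESERVED="172.16.60.1 172.16.60.2"   # host vmnet8 address and NAT gateway

# Convert a dotted-quad IPv4 address to a 32-bit integer (subshell keeps IFS local).
ip_to_int() (
    IFS=.
    set -- $1
    echo $(( ($1 << 24) + ($2 << 16) + ($3 << 8) + $4 ))
)

# Succeed if $1 is a usable static address: inside the subnet, not the network
# or broadcast address, not reserved, and outside the DHCP pool.
is_safe_static_ip() (
    ip=$(ip_to_int "$1")
    net=$(ip_to_int "$SUBNET")
    mask=$(ip_to_int "$NETMASK")
    bcast=$(( net | (mask ^ 0xFFFFFFFF) ))
    [ $(( ip & mask )) -eq "$net" ] || exit 1
    [ "$ip" -ne "$net" ] && [ "$ip" -ne "$bcast" ] || exit 1
    for r in $RESERVED; do
        [ "$1" != "$r" ] || exit 1
    done
    [ "$ip" -lt "$(ip_to_int "$RANGE_START")" ] || [ "$ip" -gt "$(ip_to_int "$RANGE_END")" ]
)

# Check the three planned node addresses plus one address from the DHCP pool.
for addr in 172.16.60.60 172.16.60.61 172.16.60.62 172.16.60.130; do
    if is_safe_static_ip "$addr"; then
        echo "$addr: OK as a static IP"
    else
        echo "$addr: conflicts with the DHCP pool or a reserved address"
    fi
done
```

If you use a different subnet, only the five variables at the top need to change.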

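2.2.2 Change the IP on Ubuntu 18.04 with netplan

On Ubuntu 18.04, IP addresses are configured with netplan.

In /etc/netplan you will find a file named 50-cloud-init.yaml. Edit it to look like the following. This example is for k8smaster: use 172.16.60.61 and 172.16.60.62 on k8snode1 and k8snode2, and adjust ens33 if your interface name differs.

network:

  version: 2

  ethernets:

    ens33:

      addresses:

        - 172.16.60.60/24

      dhcp4: false

      gateway4: 172.16.60.2

      nameservers:

        addresses:

          - 8.8.8.8

Then run the following two commands:

sudo netplan generate

sudo netplan apply

2.2.3 Change the hostname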

sudo hostnamectl set-hostname k8smaster   # use k8snode1 / k8snode2 on the worker nodes

sudo nano /etc/hosts
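Instead of editing /etc/hosts by hand on every node, you can add the planned entries with a script that is safe to re-run. Below is a sketch with these assumptions:

- add_host_entry is a hypothetical helper.
- The node list is copied from the table in 2.1.
- The demo writes to a temporary file instead of /etc/hosts.

```shell
#!/bin/sh
# Append "IP NAME" to a hosts file unless NAME is already mapped to that IP.
# Usage: add_host_entry FILE IP NAME
add_host_entry() {
    if awk -v ip="$2" -v name="$3" '
        $1 == ip { for (i = 2; i <= NF; i++) if ($i == name) found = 1 }
        END { exit (found ? 0 : 1) }' "$1"; then
        return 0    # entry already present, nothing to do
    fi
    printf '%s %s\n' "$2" "$3" >> "$1"
}

# Node plan from section 2.1.
NODES='172.16.60.60 k8smaster
172.16.60.61 k8snode1
172.16.60.62 k8snode2'

# Demo on a scratch file; on a real node (as root) pass /etc/hosts instead.
hosts_file=$(mktemp)
printf '127.0.0.1 localhost\n' > "$hosts_file"
printf '%s\n' "$NODES" | while read -r ip name; do
    add_host_entry "$hosts_file" "$ip" "$name"
done
cat "$hosts_file"
```

Running the loop a second time leaves the file unchanged, so the same script can be reused when k8snode2 is added later.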

sudo reboot

3. K8S Installation and Deployment

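3.1 Preparing the K8S Installation Environment

To make sure everything is up to date, switch to the root user, then update and upgrade the system.

sudo -i   # become root

apt-get update && apt-get upgrade -y   # update and upgrade the system

3.1.2 Install the Docker Environment

Docker versions differ between sources. Here we use the docker.io package from the Ubuntu repositories.

1. Install the Docker components

apt-get install -y docker.io

docker --version

2. Disable swap

swapoff -a

Then comment out the line containing swap in /etc/fstab, so that swap stays disabled after a reboot (by default the kubelet refuses to start while swap is on).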
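The /etc/fstab edit can be scripted. Below is a minimal sketch, assuming a standard fstab where the third field of a swap entry is swap:

- comment_out_swap is a hypothetical helper.
- It keeps a .bak copy of the file.
- It leaves lines that are already commented alone.
- The demo uses a sample file rather than the real /etc/fstab.

```shell
#!/bin/sh
# Prefix "#" to every active fstab entry whose filesystem type (field 3) is swap.
# Keeps a backup as FILE.bak; already commented lines are left untouched.
comment_out_swap() (
    fstab=$1
    tmp=$(mktemp) || exit 1
    awk '!/^[ \t]*#/ && $3 == "swap" { $0 = "#" $0 } { print }' "$fstab" > "$tmp" &&
        cp "$fstab" "$fstab.bak" &&
        cat "$tmp" > "$fstab"     # rewrite in place, keeping owner and mode
    status=$?
    rm -f "$tmp"
    exit "$status"
)

# Demo on a sample file; on the node itself run: comment_out_swap /etc/fstab
demo_fstab=$(mktemp)
printf '%s\n' 'UUID=1234-abcd / ext4 errors=remount-ro 0 1' \
              '/swap.img none swap sw 0 0' > "$demo_fstab"
comment_out_swap "$demo_fstab"
cat "$demo_fstab"
```

After swapoff -a and this edit, swapon --show should print nothing, both now and after a reboot.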

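3. Stop the firewall

systemctl stop firewalld

systemctl disable firewalld

Ubuntu 18.04 does not ship firewalld by default; its default firewall front end, ufw, is inactive out of the box. If firewalld is not installed, these two commands only report that the unit does not exist.

4. Disable SELinux

apt install selinux-utils

setenforce 0

Ubuntu uses AppArmor and does not enable SELinux by default, so setenforce will most likely just report that SELinux is disabled. The step is harmless.

5. Reload the daemon and restart Docker

systemctl daemon-reload

systemctl restart docker

3.2 K8S Installation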

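For well-known reasons,

the network we work from cannot reliably reach all of the installation sources K8S needs.

Readers without unrestricted Internet access may need to use the Aliyun or USTC mirrors instead.

Here we share our experience and will not go into the reasons again.

3.2.1 Install K8S from the Aliyun mirror

The steps below follow the Aliyun mirror instructions at https://developer.aliyun.com/mirror/kubernetes. The final command installs the newest available version. To install a specific version instead, append it to each package name, for example:

apt-get install -y kubeadm=1.15.1-00 kubelet=1.15.1-00 kubectl=1.15.1-00

The pinned version should match the kubernetesVersion set later in kubeadm-config.yaml (1.18.2 in this article, that is, package version 1.18.2-00).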

apt-get update && apt-get install -y apt-transport-https

curl https://mirrors.aliyun.com/kubernetes/apt/doc/apt-key.gpg | apt-key add -

cat <<EOF >/etc/apt/sources.list.d/kubernetes.list

deb https://mirrors.aliyun.com/kubernetes/apt/ kubernetes-xenial main

EOF

apt-get update

apt-get install -y kubelet kubeadm kubectl
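If you pin versions, the three packages should share one version string. Holding them keeps a routine apt-get upgrade from moving the cluster to a new version unexpectedly. Below is a sketch with these assumptions:

- Package versions use the Debian-style format shown above (for example 1.18.2-00).
- k8s_pkgs and pkg_matches_k8s are hypothetical helpers that only build and check arguments.
- The real install and apt-mark hold commands are left as comments.

```shell
#!/bin/sh
K8S_PKG_VERSION=1.18.2-00   # assumption: matches kubernetesVersion 1.18.2 used later

# Build the pinned package list for apt-get.
k8s_pkgs() {
    printf 'kubeadm=%s kubelet=%s kubectl=%s\n' "$1" "$1" "$1"
}

# Succeed if a package version such as 1.18.2-00 belongs to Kubernetes version $2.
pkg_matches_k8s() {
    [ "${1%-*}" = "${2#v}" ]
}

if pkg_matches_k8s "$K8S_PKG_VERSION" v1.18.2; then
    echo "apt-get install -y $(k8s_pkgs "$K8S_PKG_VERSION")"
else
    echo "package version $K8S_PKG_VERSION does not match the cluster version"
fi
# On the node, as root:
#   apt-get install -y $(k8s_pkgs "$K8S_PKG_VERSION")
#   apt-mark hold kubelet kubeadm kubectl
```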

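3.2.2 Cluster network settings

When deciding which Pod network to use for the Container Network Interface (CNI), take the expected needs of the cluster into account. A cluster can have only one Pod network, although the CNI-Genie project is trying to change this. The network must allow container-to-container, pod-to-pod, pod-to-service, and external-to-service communication.

Since this document was written, the Service Mesh project Istio has become very popular. It adds traffic management, security, and observability between services on top of the Pod network rather than replacing the CNI plugin. I hope to share more about it in the future.

Because Docker uses host-private networking, containers attached to the docker0 virtual bridge through veth interfaces can only communicate with each other on the same host. We will use Calico as the network plugin, which also lets us use network policies later.

Calico 3.3 shipped more than one manifest so that RBAC could be applied flexibly, so we download both the Calico manifest and an RBAC manifest. (The v3.10 calico.yaml used here already contains its own ClusterRoles and ClusterRoleBindings, as the kubectl apply output later shows.) After downloading, look in the Calico manifest for the IPv4 range the containers are expected to use.

Note: calico.yaml is updated over time. Use a manifest version that supports your Kubernetes version (this article uses v3.10).

wget https://tinyurl.com/yb4xturm -O rbac-kdd.yaml

wget http://docs.projectcalico.org/v3.10/manifests/calico.yaml

less calico.yaml

If needed, change CALICO_IPV4POOL_CIDR:

# The default IPv4 pool to create on startup if none exists. Pod IPs will be

# chosen from this range. Changing this value after installation will have

# no effect. This should fall within `--cluster-cidr`.

# Around line 619 (the exact line depends on the manifest version): the Pod CIDR of the K8S cluster

- name: CALICO_IPV4POOL_CIDR

  value: "192.168.0.0/16"

# Check the host's IP address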

ip addr show

2: ens33: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc fq_codel state UP group default qlen 1000

link/ether 00:0c:29:f1:32:2e brd ff:ff:ff:ff:ff:ff

inet 172.16.60.60/24 brd 172.16.60.255 scope global ens33

valid_lft forever preferred_lft forever

inet6 fe80::20c:29ff:fef1:322e/64 scope link

valid_lft forever preferred_lft forever

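Add the master's IP and hostname to /etc/hosts, so that k8smaster (used as controlPlaneEndpoint in the next step) resolves:

vim /etc/hosts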

172.16.60.60 k8smaster #<-- Add this line

127.0.0.1 localhost
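Calico's default pool 192.168.0.0/16 must not overlap the node network (172.16.60.0/24 here, from ip addr show above). If the two overlapped, traffic between Pods and hosts could be misrouted. Below is a POSIX shell sketch that tests two CIDR blocks for overlap. cidr_bounds and cidr_overlap are hypothetical names, not part of Calico or kubeadm. If the check fails, pick another range and use it in both CALICO_IPV4POOL_CIDR and podSubnet.

```shell
#!/bin/sh
# Convert a dotted-quad IPv4 address to a 32-bit integer.
ip_to_int() (
    IFS=.
    set -- $1
    echo $(( ($1 << 24) + ($2 << 16) + ($3 << 8) + $4 ))
)

# Print "first last" (as integers) for a CIDR block such as 192.168.0.0/16.
cidr_bounds() (
    addr=${1%/*}
    bits=${1#*/}
    size=$(( 1 << (32 - bits) ))
    first=$(( $(ip_to_int "$addr") / size * size ))   # clear the host bits
    echo "$first $(( first + size - 1 ))"
)

# Succeed if the two CIDR blocks share at least one address.
cidr_overlap() (
    set -- $(cidr_bounds "$1") $(cidr_bounds "$2")
    [ "$1" -le "$4" ] && [ "$3" -le "$2" ]
)

POD_CIDR=192.168.0.0/16     # CALICO_IPV4POOL_CIDR / podSubnet
NODE_CIDR=172.16.60.0/24    # from "ip addr show" on ens33
if cidr_overlap "$POD_CIDR" "$NODE_CIDR"; then
    echo "Pod CIDR $POD_CIDR overlaps node network $NODE_CIDR: choose another range"
else
    echo "Pod CIDR $POD_CIDR does not overlap node network $NODE_CIDR"
fi
```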

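3.2.3 Create a configuration file for the cluster

Create a configuration file for the cluster as follows:

# Run the following command

vim kubeadm-config.yaml

apiVersion: kubeadm.k8s.io/v1beta2

kind: ClusterConfiguration

kubernetesVersion: 1.18.2   #<-- the version used here; use the word stable for the newest version

controlPlaneEndpoint: "k8smaster:6443"

networking:

  podSubnet: 192.168.0.0/16   #<-- Match the IP range from the Calico config file

kubeadm pulls its images from k8s.gcr.io, which some networks cannot reach,

so creating the cluster fails with an error.

To work around this, manually pull the images from Aliyun, retag them with their k8s.gcr.io names, and then run the initialization. It will then complete without this error.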

# Pull the images

docker pull registry.cn-hangzhou.aliyuncs.com/google_containers/kube-apiserver:v1.18.2

docker pull registry.cn-hangzhou.aliyuncs.com/google_containers/kube-controller-manager:v1.18.2

docker pull registry.cn-hangzhou.aliyuncs.com/google_containers/kube-scheduler:v1.18.2

docker pull registry.cn-hangzhou.aliyuncs.com/google_containers/kube-proxy:v1.18.2

docker pull registry.cn-hangzhou.aliyuncs.com/google_containers/pause:3.2

docker pull registry.cn-hangzhou.aliyuncs.com/google_containers/etcd:3.4.3-0

docker pull registry.cn-hangzhou.aliyuncs.com/google_containers/coredns:1.6.7

# Retag them with the k8s.gcr.io names kubeadm expects

docker tag registry.cn-hangzhou.aliyuncs.com/google_containers/kube-apiserver:v1.18.2 k8s.gcr.io/kube-apiserver:v1.18.2

docker tag registry.cn-hangzhou.aliyuncs.com/google_containers/kube-controller-manager:v1.18.2 k8s.gcr.io/kube-controller-manager:v1.18.2

docker tag registry.cn-hangzhou.aliyuncs.com/google_containers/kube-scheduler:v1.18.2 k8s.gcr.io/kube-scheduler:v1.18.2

docker tag registry.cn-hangzhou.aliyuncs.com/google_containers/kube-proxy:v1.18.2 k8s.gcr.io/kube-proxy:v1.18.2

docker tag registry.cn-hangzhou.aliyuncs.com/google_containers/pause:3.2 k8s.gcr.io/pause:3.2

docker tag registry.cn-hangzhou.aliyuncs.com/google_containers/etcd:3.4.3-0 k8s.gcr.io/etcd:3.4.3-0

docker tag registry.cn-hangzhou.aliyuncs.com/google_containers/coredns:1.6.7 k8s.gcr.io/coredns:1.6.7
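The fourteen pull and tag commands above follow one pattern, so they can be collapsed into a loop. Below is a sketch, assuming the same Aliyun repository and the v1.18.2 image list shown above. Before pulling, you can confirm the list with kubeadm config images list --kubernetes-version v1.18.2, which prints the images kubeadm expects. The hypothetical function mirror_commands only prints the docker commands (a dry run); pipe its output to sh to execute them.

```shell
#!/bin/sh
MIRROR=registry.cn-hangzhou.aliyuncs.com/google_containers
TARGET=k8s.gcr.io
# Image list for Kubernetes v1.18.2, copied from the commands above.
IMAGES="kube-apiserver:v1.18.2 kube-controller-manager:v1.18.2
kube-scheduler:v1.18.2 kube-proxy:v1.18.2 pause:3.2 etcd:3.4.3-0 coredns:1.6.7"

# Print a docker pull + docker tag pair for every image (dry run).
mirror_commands() {
    for img in $IMAGES; do
        echo "docker pull $MIRROR/$img"
        echo "docker tag $MIRROR/$img $TARGET/$img"
    done
}

mirror_commands
# To run for real (as root, with Docker running):  mirror_commands | sh
```

kubeadm's ClusterConfiguration also has an imageRepository field that can point at a mirror directly and avoid the retagging. That approach is not used in this article.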

3.3 K8S Cluster Configuration

3.3.1 Initialize the K8S cluster

kubeadm init --config=kubeadm-config.yaml --upload-certs | tee kubeadm-init.out

## Pay attention to the messages below

To start using your cluster, you need to run the following as a regular user:

mkdir -p $HOME/.kube

sudo cp -i /etc/kubernetes/admin.conf $HOME/.kube/config

sudo chown $(id -u):$(id -g) $HOME/.kube/config

You should now deploy a pod network to the cluster.

Run "kubectl apply -f [podnetwork].yaml" with one of the options listed at:

https://kubernetes.io/docs/concepts/cluster-administration/addons/

You can now join any number of the control-plane node running the following command on each as root:

kubeadm join k8smaster:6443 --token 9co8k2.oe0rjmjeuxmqyk0e \

--discovery-token-ca-cert-hash sha256:2580ffcac4da926af28e9302b7c515c10036db59d0d0750e92b0291d6ff5f1ab \

--control-plane --certificate-key f391d9940780c846942bd8d5dd9539666b975afdae70f32d1a00e1585f49ee88

Please note that the certificate-key gives access to cluster sensitive data, keep it secret!

As a safeguard, uploaded-certs will be deleted in two hours; If necessary, you can use

"kubeadm init phase upload-certs --upload-certs" to reload certs afterward.

Then you can join any number of worker nodes by running the following on each as root:

kubeadm join k8smaster:6443 --token 9co8k2.oe0rjmjeuxmqyk0e \

--discovery-token-ca-cert-hash sha256:2580ffcac4da926af28e9302b7c515c10036db59d0d0750e92b0291d6ff5f1ab
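Because the init output was saved with tee, you can pull the worker join command out of kubeadm-init.out later instead of copying it by hand. Below is a sketch that prints the non-empty lines after the worker-node hint. worker_join_cmd is a hypothetical helper, and it assumes the wording of the kubeadm 1.18 message shown above.

The bootstrap token expires (its default TTL is 24 hours, and the token list later shows the remaining TTL). After that, running kubeadm token create --print-join-command on the master prints a fresh join command.

```shell
#!/bin/sh
# Print the worker join command (with its continuation line) from kubeadm init output.
# Assumes the kubeadm v1.18 wording "join any number of worker nodes".
worker_join_cmd() {
    awk '/join any number of worker nodes/ { found = 1; next }
         found && NF { print }' "$1"
}

# Demo on a shortened copy of the output above; on the master use kubeadm-init.out.
sample=$(mktemp)
cat > "$sample" <<'EOF'
Then you can join any number of worker nodes by running the following on each as root:

kubeadm join k8smaster:6443 --token 9co8k2.oe0rjmjeuxmqyk0e \
    --discovery-token-ca-cert-hash sha256:2580ffcac4da926af28e9302b7c515c10036db59d0d0750e92b0291d6ff5f1ab
EOF
worker_join_cmd "$sample"
```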

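Exit the root session.

As the note at the end of the output above suggests,

we will give a non-root user administrator-level access to the cluster.

After copying the file and fixing its permissions, take a quick look at the configuration file.

root@k8smaster:~# exit

mars@k8smaster:~$ mkdir -p $HOME/.kube

mars@k8smaster:~$ sudo cp -i /etc/kubernetes/admin.conf $HOME/.kube/config

mars@k8smaster:~$ sudo chown $(id -u):$(id -g) $HOME/.kube/config

mars@k8smaster:~$ less .kube/config

Apply the network plugin to the cluster

Apply the network plugin manifests to your cluster. Note: the files were downloaded as root, so first copy them into the current non-root user's home directory.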

mars@k8smaster:~$ sudo cp /root/rbac-kdd.yaml .

mars@k8smaster:~$ kubectl apply -f rbac-kdd.yaml

clusterrole.rbac.authorization.k8s.io/calico-node created

clusterrolebinding.rbac.authorization.k8s.io/calico-node created

mars@k8smaster:~$ sudo cp /root/calico.yaml .

mars@k8smaster:~$ kubectl apply -f calico.yaml

configmap/calico-config configured

customresourcedefinition.apiextensions.k8s.io/felixconfigurations.crd.projectcalico.org unchanged

customresourcedefinition.apiextensions.k8s.io/ipamblocks.crd.projectcalico.org created

customresourcedefinition.apiextensions.k8s.io/blockaffinities.crd.projectcalico.org created

customresourcedefinition.apiextensions.k8s.io/ipamhandles.crd.projectcalico.org created

customresourcedefinition.apiextensions.k8s.io/ipamconfigs.crd.projectcalico.org created

customresourcedefinition.apiextensions.k8s.io/bgppeers.crd.projectcalico.org unchanged

customresourcedefinition.apiextensions.k8s.io/bgpconfigurations.crd.projectcalico.org unchanged

customresourcedefinition.apiextensions.k8s.io/ippools.crd.projectcalico.org unchanged

customresourcedefinition.apiextensions.k8s.io/hostendpoints.crd.projectcalico.org unchanged

customresourcedefinition.apiextensions.k8s.io/clusterinformations.crd.projectcalico.org unchanged

customresourcedefinition.apiextensions.k8s.io/globalnetworkpolicies.crd.projectcalico.org unchanged

customresourcedefinition.apiextensions.k8s.io/globalnetworksets.crd.projectcalico.org unchanged

customresourcedefinition.apiextensions.k8s.io/networkpolicies.crd.projectcalico.org unchanged

customresourcedefinition.apiextensions.k8s.io/networksets.crd.projectcalico.org created

clusterrole.rbac.authorization.k8s.io/calico-kube-controllers created

clusterrolebinding.rbac.authorization.k8s.io/calico-kube-controllers created

clusterrole.rbac.authorization.k8s.io/calico-node configured

clusterrolebinding.rbac.authorization.k8s.io/calico-node configured

daemonset.apps/calico-node created

serviceaccount/calico-node unchanged

deployment.apps/calico-kube-controllers created

serviceaccount/calico-kube-controllers created

Follow-up tasks

- Enable kubectl command auto-completion

mars@k8smaster:~$ source <(kubectl completion bash)

mars@k8smaster:~$ echo "source <(kubectl completion bash)" >> ~/.bashrc

At this point you have built the K8S Master Node and can try out kubectl, the command we will use most from now on.

- Try the kubectl command

mars@k8smaster:~$ kubectl describe nodes k8smaster

Name: k8smaster

Roles: master

Labels: beta.kubernetes.io/arch=amd64

beta.kubernetes.io/os=linux

kubernetes.io/arch=amd64

kubernetes.io/hostname=k8smaster

kubernetes.io/os=linux

node-role.kubernetes.io/master=

Annotations: kubeadm.alpha.kubernetes.io/cri-socket: /var/run/dockershim.sock

node.alpha.kubernetes.io/ttl: 0

projectcalico.org/IPv4Address: 172.16.60.60/24

projectcalico.org/IPv4IPIPTunnelAddr: 192.168.16.128

volumes.kubernetes.io/controller-managed- ....

3.3.2 Expand the K8S cluster

- Configure the Worker Nodes

Open another terminal and connect to the second node. Install Docker and the Kubernetes software, repeating many, but not all, of the steps we performed on the master node.

root@k8snode1:~# sudo -i

root@k8snode1:~# apt-get update && apt-get upgrade -y

root@k8snode1:~# apt-get install -y docker.io

root@k8snode1:~# docker --version

root@k8snode1:~# swapoff -a

root@k8snode1:~# systemctl stop firewalld

root@k8snode1:~# systemctl disable firewalld

root@k8snode1:~# apt install selinux-utils

root@k8snode1:~# setenforce 0

root@k8snode1:~# systemctl daemon-reload

root@k8snode1:~# systemctl restart docker

root@k8snode1:~# apt-get update && apt-get install -y apt-transport-https

root@k8snode1:~# curl https://mirrors.aliyun.com/kubernetes/apt/doc/apt-key.gpg | apt-key add -

root@k8snode1:~# cat <<EOF >/etc/apt/sources.list.d/kubernetes.list

deb https://mirrors.aliyun.com/kubernetes/apt/ kubernetes-xenial main

EOF

root@k8snode1:~# apt-get update

root@k8snode1:~# apt-get install -y kubelet kubeadm kubectl

- On the Master node

- Get the IP >> to add to the worker node's /etc/hosts file

- Get the token >> for the worker node to join the cluster

# Get the IP

root@k8smaster:~# ip addr show ens33 |grep inet

inet 172.16.60.60/24 brd 172.16.60.255 scope global ens33

inet6 fe80::20c:29ff:fef1:322e/64 scope link

# Get the token and the CA certificate hash

mars@k8smaster:~$ sudo kubeadm token list

TOKEN TTL EXPIRES USAGES DESCRIPTION EXTRA GROUPS

9co8k2.oe0rjmjeuxmqyk0e 18h 2020-05-21T09:52:30Z authentication,signing <none> system:bootstrappers:kubeadm:default-node-token

mars@k8smaster:~$ openssl x509 -pubkey -in /etc/kubernetes/pki/ca.crt | openssl rsa -pubin -outform der 2>/dev/null | openssl dgst -sha256 -hex | sed 's/^.* //'

2580ffcac4da926af28e9302b7c515c10036db59d0d0750e92b0291d6ff5f1ab

- Back on the Worker Node

- Add the Master node IP to the worker node's /etc/hosts file

- Join Cluster with the token

# Add the Master node IP to the worker node's /etc/hosts file

root@k8snode1:~# vim /etc/hosts

172.16.60.61 k8snode1

172.16.60.60 k8smaster

127.0.0.1 localhost

127.0.1.1 k8snode1

#Join Cluster with the token

root@k8snode1:~# kubeadm join --token 9co8k2.oe0rjmjeuxmqyk0e k8smaster:6443 --discovery-token-ca-cert-hash sha256:2580ffcac4da926af28e9302b7c515c10036db59d0d0750e92b0291d6ff5f1ab

W0520 22:04:03.503569 103170 join.go:346] [preflight] WARNING: JoinControlPane.controlPlane settings will be ignored when control-plane flag is not set.

[preflight] Running pre-flight checks

[preflight] Reading configuration from the cluster...

[preflight] FYI: You can look at this config file with 'kubectl -n kube-system get cm kubeadm-config -oyaml'

[kubelet-start] Downloading configuration for the kubelet from the "kubelet-config-1.18" ConfigMap in the kube-system namespace

[kubelet-start] Writing kubelet configuration to file "/var/lib/kubelet/config.yaml"

[kubelet-start] Writing kubelet environment file with flags to file "/var/lib/kubelet/kubeadm-flags.env"

[kubelet-start] Starting the kubelet

[kubelet-start] Waiting for the kubelet to perform the TLS Bootstrap...

This node has joined the cluster:

* Certificate signing request was sent to apiserver and a response was received.

* The Kubelet was informed of the new secure connection details.

Run 'kubectl get nodes' on the control-plane to see this node join the cluster.

Note: if you now run kubectl get node on the second node, it fails, because the second node has no .kube/config file.

So we go back to the Master Node to check the nodes.

root@k8snode1:~# exit

logout

root@k8snode1:~# kubectl get node

error: no configuration has been provided, try setting KUBERNETES_MASTER environment variable

root@k8snode1:~# ls -l .kube

ls: cannot access '.kube': No such file or directory

3.3.3 Complete the K8S cluster configuration

Now run kubectl get node on the master node, and you will see both nodes listed.

mars@k8smaster:~$ kubectl get node

NAME STATUS ROLES AGE VERSION

k8smaster NotReady master 12h v1.18.2

k8snode1 NotReady <none> 7m36s v1.18.2

View the node details to see its resources and current state.

Note the taint status: for security reasons, by default the master node does not run non-infrastructure Pods.


mars@k8smaster:~$ kubectl describe node k8smaster

Name: k8smaster

Roles: master

Labels: beta.kubernetes.io/arch=amd64

beta.kubernetes.io/os=linux

kubernetes.io/arch=amd64

kubernetes.io/hostname=k8smaster

kubernetes.io/os=linux

node-role.kubernetes.io/master=

Annotations: kubeadm.alpha.kubernetes.io/cri-socket: /var/run/dockershim.sock

node.alpha.kubernetes.io/ttl: 0

projectcalico.org/IPv4Address: 172.16.60.60/24

projectcalico.org/IPv4IPIPTunnelAddr: 192.168.16.128

volumes.kubernetes.io/controller-managed-attach-detach: true

CreationTimestamp: Wed, 20 May 2020 09:52:27 +0000

Taints: node.kubernetes.io/not-ready:NoExecute

node.kubernetes.io/not-ready:NoSchedule

Unschedulable: false

Lease:

HolderIdentity: k8smaster

AcquireTime: <unset>

RenewTime: Wed, 20 May 2020 22:21:24 +0000

Conditions:

Type Status LastHeartbeatTime LastTransitionTime Reason Message

---- ------ ----------------- ------------------ ------ -------

NetworkUnavailable False Wed, 20 May 2020 12:07:49 +0000 Wed, 20 May 2020 12:07:49 +0000 CalicoIsUp Calico is running on this node

MemoryPressure False Wed, 20 May 2020 22:21:16 +0000 Wed, 20 May 2020 09:52:19 +0000 KubeletHasSufficientMemory kubelet has sufficient memory available

DiskPressure False Wed, 20 May 2020 22:21:16 +0000 Wed, 20 May 2020 09:52:19 +0000 KubeletHasNoDiskPressure kubelet has no disk pressure

PIDPressure False Wed, 20 May 2020 22:21:16 +0000 Wed, 20 May 2020 09:52:19 +0000 KubeletHasSufficientPID kubelet has sufficient PID available

Ready False Wed, 20 May 2020 22:21:16 +0000 Wed, 20 May 2020 15:42:41 +0000 KubeletNotReady [container runtime is down, PLEG is not healthy: pleg was last seen active 6h38m44.309847066s ago; threshold is 3m0s, Container runtime not ready: RuntimeReady=false reason:DockerDaemonNotReady message:docker: failed to get docker version: Cannot connect to the Docker daemon at unix:///var/run/docker.sock. Is the docker daemon running?]

Addresses:

InternalIP: 172.16.60.60

Hostname: k8smaster

Capacity:

cpu: 2

ephemeral-storage: 20508240Ki

hugepages-1Gi: 0

hugepages-2Mi: 0

memory: 4015880Ki

pods: 110

Allocatable:

cpu: 2

ephemeral-storage: 18900393953

hugepages-1Gi: 0

hugepages-2Mi: 0

memory: 3913480Ki

pods: 110

System Info:

Machine ID: 40d7883ef502417da80fafbb691b0111

System UUID: 7F024D56-0B9F-5F74-71AD-36F9EEF1322E

Boot ID: 8dee0fc3-dd26-4fce-8f56-89866224cc1c

Kernel Version: 4.15.0-101-generic

OS Image: Ubuntu 18.04.4 LTS

Operating System: linux

Architecture: amd64

Container Runtime Version: docker://Unknown

Kubelet Version: v1.18.2

Kube-Proxy Version: v1.18.2

PodCIDR: 192.168.0.0/24

PodCIDRs: 192.168.0.0/24

Non-terminated Pods: (9 in total)

Namespace Name CPU Requests CPU Limits Memory Requests Memory Limits AGE

--------- ---- ------------ ---------- --------------- ------------- ---

kube-system calico-kube-controllers-dc4469c7f-2pvnl 0 (0%) 0 (0%) 0 (0%) 0 (0%) 10h

kube-system calico-node-jdtlc 250m (12%) 0 (0%) 0 (0%) 0 (0%) 10h

kube-system coredns-66bff467f8-bh2dx 100m (5%) 0 (0%) 70Mi (1%) 170Mi (4%) 12h

kube-system coredns-66bff467f8-x9jg7 100m (5%) 0 (0%) 70Mi (1%) 170Mi (4%) 12h

kube-system etcd-k8smaster 0 (0%) 0 (0%) 0 (0%) 0 (0%) 12h

kube-system kube-apiserver-k8smaster 250m (12%) 0 (0%) 0 (0%) 0 (0%) 12h

kube-system kube-controller-manager-k8smaster 200m (10%) 0 (0%) 0 (0%) 0 (0%) 12h

kube-system kube-proxy-w2pbs 0 (0%) 0 (0%) 0 (0%) 0 (0%) 12h

kube-system kube-scheduler-k8smaster 100m (5%) 0 (0%) 0 (0%) 0 (0%) 12h

Allocated resources:

(Total limits may be over 100 percent, i.e., overcommitted.)

Resource Requests Limits

-------- -------- ------

cpu 1 (50%) 0 (0%)

memory 140Mi (3%) 340Mi (8%)

ephemeral-storage 0 (0%) 0 (0%)

hugepages-1Gi 0 (0%) 0 (0%)

hugepages-2Mi 0 (0%) 0 (0%)

Events:

Type Reason Age From Message

---- ------ ---- ---- -------

Warning ContainerGCFailed 38m (x361 over 6h38m) kubelet, k8smaster rpc error: code = Unknown desc = Cannot connect to the Docker daemon at unix:///var/run/docker.sock. Is the docker daemon running?

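Warning ImageGCFailed 3m40s (x79 over 6h33m) kubelet, k8smaster failed to get image stats: rpc error: code = Unknown desc = Cannot connect to the Docker daemon at unix:///var/run/docker.sock. Is the docker daemon running?

In this output the Ready condition is False because the Docker daemon on k8smaster was not running (see the KubeletNotReady message and the Events). This is also why the node carries the node.kubernetes.io/not-ready taints. If you see this, start Docker again with systemctl restart docker and check that the node turns Ready.

3.3.4 Configure the Master Node taints

View the node details to see the resources and their current state, and note the taint status.

For security reasons, by default the master does not run non-infrastructure Pods.

Because this is a lab environment, we change the Master Node taints here so that the master can run non-infrastructure containers.

mars@k8smaster:~$ kubectl describe node | grep -i taint

Taints: node.kubernetes.io/not-ready:NoExecute

Taints: node.kubernetes.io/not-ready:NoSchedule

To let the master run non-infrastructure containers, we remove its taint.

We do this only because the node is used in a training environment.

In a production environment you can skip this step. Note the minus sign (-) at the end, which is the syntax for removing a taint.

Because the second node does not have this taint, you will get a not found error.

mars@k8smaster:~$ kubectl taint nodes --all node-role.kubernetes.io/master-

node/k8smaster untainted

error: taint "node-role.kubernetes.io/master" not found

Now that the master node can run any Pod, we may find a new taint. This behavior began with v1.12.0, where a newly added node must first be enabled. View the taints, then remove them if present. The scheduler may need a minute or two to deploy the remaining Pods.

Note that node.kubernetes.io/not-ready is managed by the node controller: it is added while a node is NotReady and removed automatically once the node becomes Ready. Removing it by hand therefore does not help while the node is still NotReady, because it will be added back.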

mars@k8smaster:~$ kubectl describe node | grep -i taint

Taints: node.kubernetes.io/not-ready:NoExecute

Taints: node.kubernetes.io/not-ready:NoSchedule

mars@k8smaster:~$ kubectl taint nodes --all node.kubernetes.io/not-ready-

node/k8smaster untainted

node/k8snode1 untainted

Determine if the DNS and Calico pods are ready for use. They should all show a status of Running. It may take a minute or two to transition from Pending.

kubectl get pods --all-namespaces

Note: -o wide shows which node each Pod runs on, and --namespace=kube-system shows only the system Pods.

mars@k8smaster:~$ kubectl get po --all-namespaces -o wide

NAMESPACE NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES

default nginx-f89759699-fmh2h 1/1 Running 0 5h34m 192.168.249.1 k8snode1 <none> <none>

kube-system calico-kube-controllers-dc4469c7f-7tf7x 1/1 Running 0 2d5h 192.168.16.132 k8smaster <none> <none>

kube-system calico-node-jdtlc 1/1 Running 1 2d9h 172.16.60.60 k8smaster <none> <none>

kube-system calico-node-w6mpp 1/1 Running 0 47h 172.16.60.61 k8snode1 <none> <none>

kube-system coredns-66bff467f8-f7w84 1/1 Running 0 2d5h 192.168.16.134 k8smaster <none> <none>

kube-system coredns-66bff467f8-hlgqx 1/1 Running 0 2d5h 192.168.16.133 k8smaster <none> <none>

kube-system etcd-k8smaster 1/1 Running 1 2d11h 172.16.60.60 k8smaster <none> <none>

kube-system kube-apiserver-k8smaster 1/1 Running 1 2d11h 172.16.60.60 k8smaster <none> <none>

kube-system kube-controller-manager-k8smaster 1/1 Running 1 2d11h 172.16.60.60 k8smaster <none> <none>

kube-system kube-proxy-htgpg 1/1 Running 1 47h 172.16.60.61 k8snode1 <none> <none>

kube-system kube-proxy-w2pbs 1/1 Running 1 2d11h 172.16.60.60 k8smaster <none> <none>

kube-system kube-scheduler-k8smaster 1/1 Running 1 2d11h 172.16.60.60 k8smaster <none> <none>
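Instead of reading the list by eye, you can check the STATUS column with a small filter. Below is a sketch, assuming the column layout of kubectl get pods --all-namespaces --no-headers, where STATUS is the fourth column. not_running is a hypothetical helper: it prints any Pod whose status is not Running and returns non-zero if it finds one. kubectl wait --for=condition=Ready pod --all -n kube-system is a built-in alternative that blocks until the Pods are Ready.

```shell
#!/bin/sh
# Read "kubectl get pods --all-namespaces --no-headers" output on stdin and print
# "namespace/name status" for every Pod whose STATUS is not Running.
# Exit status: 0 if all Pods are Running, 1 otherwise.
# (Pods of finished Jobs show Completed and would be reported as well.)
not_running() {
    awk '$4 != "Running" { print $1 "/" $2 " " $4; bad = 1 }
         END { exit bad + 0 }'
}

# Usage on the master:
#   kubectl get pods --all-namespaces --no-headers | not_running
# Demo with two lines in the same format as the listing above:
if printf '%s\n' \
     'kube-system calico-node-w6mpp 1/1 Running 0 47h' \
     'kube-system coredns-66bff467f8-f7w84 0/1 Pending 0 1m' | not_running; then
    echo "all Pods Running"
else
    echo "some Pods are not Running yet"
fi
```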

3.3.5 Add Node2 to the cluster to complete the configuration

Add Node2 to the cluster in the same way; the initial K8S configuration is then complete.

mars@k8smaster:~$ kubectl get node

NAME STATUS ROLES AGE VERSION

k8smaster Ready master 12h v1.18.2

k8snode1 Ready <none> 7m36s v1.18.2

k8snode2 Ready <none> 2m12s v1.18.2
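As a final check, you can confirm that every node is Ready and that all kubelets report the same version. Below is a sketch, assuming the kubectl get nodes --no-headers layout NAME STATUS ROLES AGE VERSION. check_nodes is a hypothetical helper. kubectl wait --for=condition=Ready node --all --timeout=300s is a built-in way to block until all nodes report Ready.

```shell
#!/bin/sh
# Read "kubectl get nodes --no-headers" on stdin (NAME STATUS ROLES AGE VERSION).
# Report nodes that are not Ready and whether the kubelet versions differ.
# Exit status: 0 if everything looks consistent, 1 otherwise.
check_nodes() {
    awk '$2 != "Ready" { print "not ready: " $1; bad = 1 }
         { versions[$5] = 1 }
         END {
             n = 0
             for (v in versions) n++
             if (n > 1) { print "kubelet versions differ"; bad = 1 }
             exit bad + 0
         }'
}

# Usage on the master:  kubectl get nodes --no-headers | check_nodes
# Demo with the output shown above:
if printf '%s\n' \
     'k8smaster Ready master 12h v1.18.2' \
     'k8snode1 Ready <none> 7m36s v1.18.2' \
     'k8snode2 Ready <none> 2m12s v1.18.2' | check_nodes; then
    echo "cluster OK"
else
    echo "check the nodes listed above"
fi
```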