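Kasten Elite Bootcamp Courseware Upgrade - Adding CSI Snapshot-Based Backups

Preface

Innovation and iteration are among Veeam's core values. We recently upgraded the courseware for the Kasten Elite Bootcamp again, and the new edition includes the following updates.

- Upgraded Ubuntu to 22.04; the lab still runs on a single virtual machine with 4 vCPUs, 8 GB RAM, and a 200 GB disk

- Uses the external HostPath CSI driver and the external snapshotter

- Upgraded Kasten K10 to 5.0.11

- Uses Kasten to call the HostPath CSI driver and its snapshots for backup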

Contents

[toc]

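Author's observations: news from KubeCon

KubeCon + CloudNativeCon 2022 recently took place as scheduled in Detroit, Michigan. As a Diamond sponsor, Kasten by Veeam announced the new Kasten by Veeam K10 V5.5 at KubeCon, which brings a series of new features.

New features in Kasten K10 V5.5 include:

- Intelligent policies

  Kasten K10 adds intelligent decision-making that simplifies data protection at scale. Users can define backup time windows during off-peak hours, guided by policy recommendations. Beyond flexibly suggesting backup windows, Kasten K10 also automates the sequencing of the underlying backup jobs. This optimizes utilization of the underlying infrastructure and automatically resolves conflicts when multiple policies run concurrently.

- Easier deployment and scaling

  Kasten K10 provides an intuitive graphical wizard that generates the most suitable installation manifest, further simplifying deployment. Kasten K10 5.5 also supports IPv6, including IPv6 pod-to-pod communication on Amazon EKS, and integrates with GitOps workflows, providing scalable workflows for efficient application deployment as well as backup and restore.

- Expanded cloud native ecosystem

  Customers get the latest advances across a growing range of workload types, geographic regions, storage types, and security capabilities. Kasten K10 now supports Red Hat OpenShift Virtualization, so you can run and manage VM and container workloads side by side on Red Hat OpenShift. In addition, Kasten K10 adds support for OCP 4.10, Kubernetes 1.23, Azure Files as a backup target, and Azure Managed Identity, along with more AWS and GCP regions.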

Kasten by Veeam Announces NEW Kasten K10 V5.5 to Simplify Kubernetes Data Protection at Scale with Autonomous Operations and Cloud Native Expansion

https://www.veeam.com/news/kasten-by-veeam-announces-new-kasten-k10-v5-5-to-simplify-kubernetes-data-protection-at-scale-with-autonomous-operations-and-cloud-native-expansion.html

KubeCon + CloudNativeCon conference details

https://events.linuxfoundation.org/kubecon-cloudnativecon-north-america/

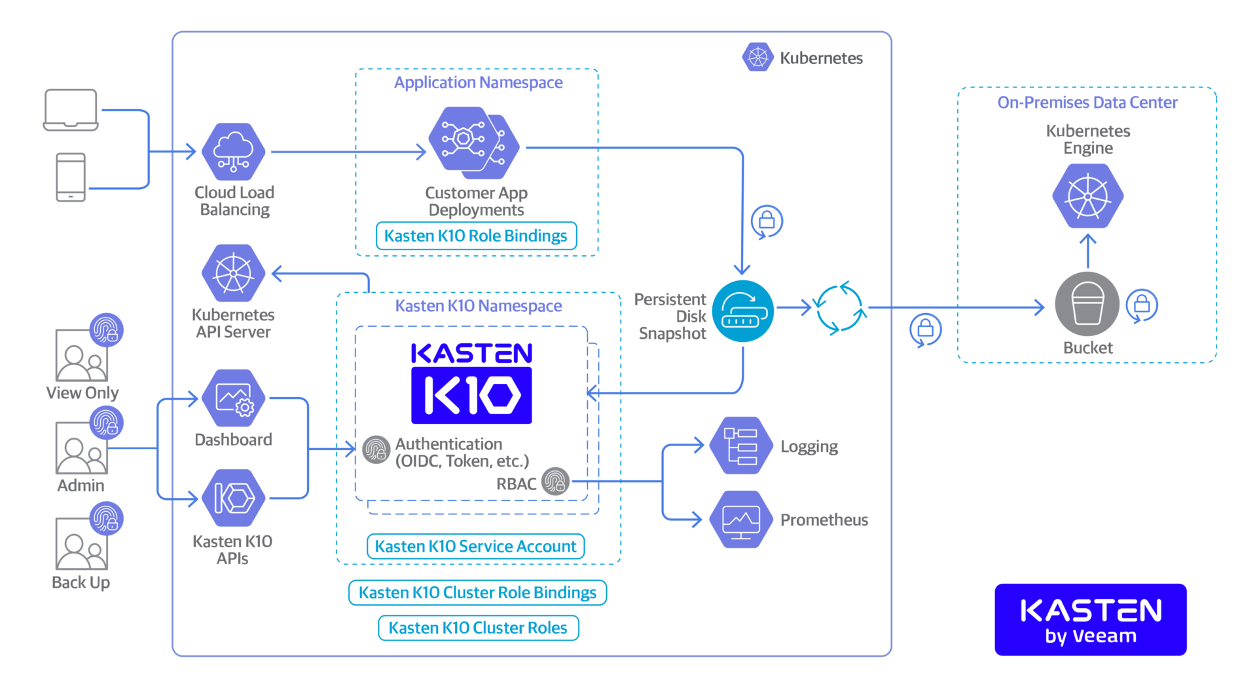

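1. Preparing the Kasten K10 lab environment

1.1. Configure the virtual machine

- Virtual machine configuration

  4 vCPUs, 8 GB RAM, 200 GB HDD; IP: NAT + static IP; users: a user with sudo privileges and root (optional)

- Operating system

  Ubuntu 20.04 LTS or Ubuntu 22.04 LTS. For convenience in the lab, the Desktop edition is sufficient. The download link is listed in the reference links at the end of this article.

1.2. Prepare the virtual machine system environment

After installing the operating system, install a series of software packages on the virtual machine.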

# Host configuration (optional; adapt to your own environment)

$ sudo apt install systemd

# hostname config

$ sudo hostnamectl set-hostname mars-k8s1

$ hostnamectl

$ sudo apt install vim

# Only needed for multi-node setups (optional)

$ sudo vim /etc/hosts

172.16.124.70 mars-k8s-master1

172.16.124.71 mars-k8s-worker1

# Software configuration (optional; adapt to your own environment)

$ sudo apt-get install openssh-server

$ sudo apt-get install -y apt-transport-https ca-certificates \
    curl gnupg lsb-release

$ sudo apt-get update

# Install helm

$ sudo snap install helm --classic

1.3. Prepare the Docker installation environment

# Remove any existing Docker packages

$ sudo apt-get remove -y docker docker-engine docker.io containerd runc

# Disable swap

$ sudo swapoff -a

$ sudo sed -i -e '/swap/d' /etc/fstab

$ swapon --show

# Install Docker

$ curl -fsSL https://download.docker.com/linux/ubuntu/gpg \
    | sudo gpg --dearmor -o /usr/share/keyrings/docker-archive-keyring.gpg

$ echo "deb [arch=amd64 signed-by=/usr/share/keyrings/docker-archive-keyring.gpg] https://download.docker.com/linux/ubuntu $(lsb_release -cs) stable" \
    | sudo tee /etc/apt/sources.list.d/docker.list

$ sudo apt-get update

$ sudo apt-get install -y docker-ce docker-ce-cli containerd.io

$ sudo mkdir -p /etc/docker

$ cat <<EOF | sudo tee /etc/docker/daemon.json
{
  "exec-opts": ["native.cgroupdriver=systemd"],
  "log-driver": "json-file",
  "log-opts": {"max-size": "100m"},
  "storage-driver": "overlay2"
}
EOF

# Start Docker at boot and grant the current user access

$ sudo systemctl restart docker

$ sudo systemctl enable docker

$ sudo usermod -aG docker $USER

1.4. Install and configure Kubernetes

# Install Kubernetes

$ curl -s https://mirrors.aliyun.com/kubernetes/apt/doc/apt-key.gpg | sudo apt-key add -

$ sudo tee /etc/apt/sources.list.d/kubernetes.list <<EOF
deb https://mirrors.aliyun.com/kubernetes/apt/ kubernetes-xenial main
EOF

$ sudo apt-get update

$ sudo apt-get install -y kubeadm=1.23.1-00 kubectl=1.23.1-00 kubelet=1.23.1-00

# Start kubelet at boot

$ sudo systemctl enable kubelet

$ sudo -i

# Commands prefixed with ♯ below must be run as root

♯ systemctl enable kubelet

♯ systemctl stop etcd

♯ rm -rf /var/lib/etcd

# Initialize the Kubernetes cluster

♯ kubeadm init --kubernetes-version=1.23.1 --pod-network-cidr 10.16.0.0/16 --image-repository registry.aliyuncs.com/google_containers

♯ exit

# Set up kubeconfig

$ mkdir -p $HOME/.kube

$ sudo cp -i /etc/kubernetes/admin.conf $HOME/.kube/config

$ sudo chown $(id -u):$(id -g) $HOME/.kube/config

# Check the node status

$ kubectl get node

# Remove the taint so that workloads can be scheduled on this single node

$ kubectl taint nodes --all node-role.kubernetes.io/master-

1.5. Configure Kubernetes networking

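# Install the CNI plugin

$ kubectl apply -f https://docs.projectcalico.org/manifests/calico.yaml

# Watch the Calico pods start

$ kubectl get pod -n kube-system -w

# Note: the CNI (Calico here) is a prerequisite for CoreDNS to start. Pulling the images can take a while; check the pod status yourself if it stalls.

$ kubectl get po -n kube-system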

NAMESPACE NAME READY STATUS RESTARTS AGE

kube-system calico-kube-controllers-54965c7ccb-w944s 1/1 Running 0 40m

kube-system calico-node-rzrww 1/1 Running 0 39m

kube-system coredns-6d8c4cb4d-5gz58 1/1 Running 0 39m

kube-system coredns-6d8c4cb4d-kl28q 1/1 Running 0 39m

kube-system etcd-mars-k8s-1 1/1 Running 2 (32m ago) 8h

kube-system kube-apiserver-mars-k8s-1 1/1 Running 2 (32m ago) 8h

kube-system kube-controller-manager-mars-k8s-1 1/1 Running 2 (32m ago) 8h

kube-system kube-proxy-k78cr 1/1 Running 2 (32m ago) 8h

kube-system kube-scheduler-mars-k8s-1 1/1 Running 3 (32m ago) 8h

1.6. Install and configure the storage and snapshot components: StorageClass and VolumeSnapshotClass

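HostPath is a cloud native storage type whose volumes are directories on the node (virtual machine or physical machine) where the Pod is scheduled to run. We will enable HostPath for the Kubernetes cluster and test the VolumeSnapshot functionality, which is a prerequisite for running Kasten K10. Follow the steps below to install and verify the HostPath CSI driver:

1.6.1 Install the storage class and snapshot class

Install the volumesnapshot, volumesnapshotcontent, and volumesnapshotclass CRDs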

# Create snapshot controller CRDs

$ kubectl apply -f https://github.com/kubernetes-csi/external-snapshotter/raw/v5.0.1/client/config/crd/snapshot.storage.k8s.io_volumesnapshotclasses.yaml

$ kubectl apply -f https://github.com/kubernetes-csi/external-snapshotter/raw/v5.0.1/client/config/crd/snapshot.storage.k8s.io_volumesnapshotcontents.yaml

$ kubectl apply -f https://github.com/kubernetes-csi/external-snapshotter/raw/v5.0.1/client/config/crd/snapshot.storage.k8s.io_volumesnapshots.yaml

# Create snapshot controller (SNAPSHOTTER_VERSION is assumed here to match the v5.0.1 CRDs applied above)

$ export SNAPSHOTTER_VERSION=v5.0.1

$ kubectl apply -f https://raw.githubusercontent.com/kubernetes-csi/external-snapshotter/${SNAPSHOTTER_VERSION}/deploy/kubernetes/snapshot-controller/rbac-snapshot-controller.yaml

$ kubectl apply -f https://raw.githubusercontent.com/kubernetes-csi/external-snapshotter/${SNAPSHOTTER_VERSION}/deploy/kubernetes/snapshot-controller/setup-snapshot-controller.yaml

Reference links for the external snapshotter CRDs and controller (URLs are listed in section 5):

external snapshotter controller

external snapshotter crd
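Before moving on, it can help to confirm that the three snapshot CRDs are actually registered in the cluster. The following is a minimal sketch rather than part of the original courseware: the function name `missing_snapshot_crds` is made up for illustration, and the CRD names are assumed to be the ones defined by the v5.0.1 manifests applied above.

```shell
#!/usr/bin/env bash
# Sketch: print the name of every snapshot CRD that is NOT registered.
# Assumption: the CRD names below are the ones created by the three
# external-snapshotter manifests used in this section.

missing_snapshot_crds() {
  local crd
  for crd in \
    volumesnapshotclasses.snapshot.storage.k8s.io \
    volumesnapshotcontents.snapshot.storage.k8s.io \
    volumesnapshots.snapshot.storage.k8s.io
  do
    # `kubectl get crd NAME` exits non-zero when the CRD does not exist.
    if ! kubectl get crd "$crd" >/dev/null 2>&1; then
      echo "$crd"
    fi
  done
}

# Usage (needs a reachable cluster):
#   missing=$(missing_snapshot_crds)
#   if [ -z "$missing" ]; then echo "all snapshot CRDs present"; else echo "missing: $missing"; fi
```

An empty result suggests the CRDs are in place; any printed name points at a manifest that still needs to be applied.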

1.6.2 Deploy the CSI driver with its deployment script

$ git clone https://github.com/kubernetes-csi/csi-driver-host-path.git

$ cd csi-driver-host-path/deploy/kubernetes-1.XX

$ ./deploy.sh

# Check that the pods are running

$ kubectl get po -A

NAMESPACE NAME READY STATUS RESTARTS AGE

default csi-hostpath-socat-0 1/1 Running 0 4m47s

default csi-hostpathplugin-0 8/8 Running 0 4m47s

kube-system calico-kube-controllers-54965c7ccb-w944s 1/1 Running 0 5h46m

kube-system calico-node-rzrww 1/1 Running 0 5h46m

kube-system coredns-6d8c4cb4d-5gz58 1/1 Running 0 5h45m

kube-system coredns-6d8c4cb4d-kl28q 1/1 Running 0 5h46m

kube-system etcd-mars-k8s-1 1/1 Running 2 (5h39m ago) 13h

# csi-hostpathplugin consists of 8 containers; their names give a rough idea of what each one does

$ kubectl describe po csi-hostpathplugin-0 |grep Image:

Image: registry.k8s.io/sig-storage/hostpathplugin:v1.9.0

Image: registry.k8s.io/sig-storage/csi-external-health-monitor-controller:v0.6.0

Image: registry.k8s.io/sig-storage/csi-node-driver-registrar:v2.5.1

Image: registry.k8s.io/sig-storage/livenessprobe:v2.7.0

Image: registry.k8s.io/sig-storage/csi-attacher:v3.5.0

Image: registry.k8s.io/sig-storage/csi-provisioner:v3.2.1

Image: registry.k8s.io/sig-storage/csi-resizer:v1.5.0

Image: registry.k8s.io/sig-storage/csi-snapshotter:v6.0.1

1.6.3 Inspect and configure the storageclass and snapshotclass

# Storage class

$ kubectl get sc

NAME PROVISIONER RECLAIMPOLICY VOLUMEBINDINGMODE ALLOWVOLUMEEXPANSION AGE

csi-hostpath-sc (default) hostpath.csi.k8s.io Retain Immediate false 3s

# Snapshot class

$ kubectl get volumesnapshotclasses

NAME DRIVER DELETIONPOLICY AGE

csi-hostpath-snapclass hostpath.csi.k8s.io Delete 31m

# Annotate the snapshot class so that K10 uses it

$ kubectl annotate volumesnapshotclass csi-hostpath-snapclass k10.kasten.io/is-snapshot-class=true

volumesnapshotclass.snapshot.storage.k8s.io/csi-hostpath-snapclass annotated

# Annotate the storage class with the snapshot class to use

$ kubectl annotate storageclass csi-hostpath-sc k10.kasten.io/volume-snapshot-class=csi-hostpath-snapclass
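To double-check that both annotations landed, they can be read back with kubectl's JSONPath output. This is a small sketch under the assumption that the object and annotation names are exactly the ones used above; the helper name `get_annotation` is hypothetical.

```shell
#!/usr/bin/env bash
# Sketch: print the value of one annotation on a cluster object
# (prints nothing if the annotation is not set).

get_annotation() {
  local kind=$1 name=$2 key=$3
  # Dots inside the annotation key must be escaped in kubectl's JSONPath.
  local escaped=${key//./\\.}
  kubectl get "$kind" "$name" -o "jsonpath={.metadata.annotations.${escaped}}"
}

# Usage (needs a reachable cluster):
#   get_annotation volumesnapshotclass csi-hostpath-snapclass k10.kasten.io/is-snapshot-class
#   get_annotation storageclass csi-hostpath-sc k10.kasten.io/volume-snapshot-class
```

With the annotations from this section in place, the first call should print `true` and the second `csi-hostpath-snapclass`.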

1.6.4. Verify the storage class and snapshots with the Kasten pre-flight checks script

$ curl https://docs.kasten.io/tools/k10_primer.sh | bash

% Total % Received % Xferd Average Speed Time Time Time Current

Dload Upload Total Spent Left Speed

100 7113 100 7113 0 0 12513 0 --:--:-- --:--:-- --:--:-- 12522

Namespace option not provided, using default namespace

Checking for tools

--> Found kubectl

--> Found helm

Checking if the Kasten Helm repo is present

--> The Kasten Helm repo was found

Checking for required Helm version (>= v3.0.0)

--> No Tiller needed with Helm v3.7.0

K10Primer image

--> Using Image (gcr.io/kasten-images/k10tools:5.0.11) to run test

Checking access to the Kubernetes context kubernetes-admin@kubernetes

--> Able to access the default Kubernetes namespace

K10 Kanister tools image

--> Using Kanister tools image (ghcr.io/kanisterio/kanister-tools:0.83.0) to run test

Running K10Primer Job in cluster with command-

./k10tools primer

serviceaccount/k10-primer created

clusterrolebinding.rbac.authorization.k8s.io/k10-primer created

job.batch/k10primer created

Waiting for pod k10primer-lfc5l to be ready - ContainerCreating

Waiting for pod k10primer-lfc5l to be ready - ContainerCreating

Pod Ready!

Kubernetes Version Check:

Valid kubernetes version (v1.23.1) - OK

RBAC Check:

Kubernetes RBAC is enabled - OK

Aggregated Layer Check:

The Kubernetes Aggregated Layer is enabled - OK

Found multiple snapshot API group versions, using preferred.

CSI Capabilities Check:

Using CSI GroupVersion snapshot.storage.k8s.io/v1 - OK

Validate Generic Volume Snapshot:

Pod created successfully - OK

GVS Backup command executed successfully - OK

Pod deleted successfully - OK

serviceaccount "k10-primer" deleted

clusterrolebinding.rbac.authorization.k8s.io "k10-primer" deleted

job.batch "k10primer" deleted

Install a test workload: MySQL

# add bitnami repo

$ helm repo add bitnami https://charts.bitnami.com/bitnami

# helm install mysql

$ helm install mysql-release bitnami/mysql --namespace mysql --create-namespace \
    --set auth.rootPassword='Start123' \
    --set primary.persistence.size=10Gi

# check mysql status

$ kubectl get all -n mysql

NAME READY STATUS RESTARTS AGE

pod/mysql-release-0 1/1 Running 0 99s

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

service/mysql-release ClusterIP 10.104.247.217 <none> 3306/TCP 48m

service/mysql-release-headless ClusterIP None <none> 3306/TCP 48m

NAME READY AGE

statefulset.apps/mysql-release 1/1 48m

2. Install and configure Kasten K10

2.1. Install Kasten K10

# Add helm chart for Kasten

$ helm repo add kasten https://charts.kasten.io/

# Check if kasten repo already added

$ helm repo list

# Fetch the chart (this downloads k10-5.0.11.tgz into the current directory)

$ helm fetch kasten/k10 --version=5.0.11

# Install Kasten with the following parameters. The two commands differ only in the image registry, so run only one of them.

# Option 1: pull the images from ccr.ccs.tencentyun.com/kasten

$ helm install k10 k10-5.0.11.tgz --namespace kasten-io --create-namespace \
    --set global.airgapped.repository=ccr.ccs.tencentyun.com/kasten \
    --set auth.tokenAuth.enabled=true \
    --set metering.mode=airgap \
    --set injectKanisterSidecar.enabled=true \
    --set-string injectKanisterSidecar.namespaceSelector.matchLabels.k10/injectKanisterSidecar=true \
    --set global.persistence.storageClass=csi-hostpath-sc

# Option 2: pull the images from public.ecr.aws/kasten-images

$ helm install k10 k10-5.0.11.tgz --namespace kasten-io --create-namespace \
    --set global.airgapped.repository=public.ecr.aws/kasten-images \
    --set auth.tokenAuth.enabled=true \
    --set metering.mode=airgap \
    --set injectKanisterSidecar.enabled=true \
    --set-string injectKanisterSidecar.namespaceSelector.matchLabels.k10/injectKanisterSidecar=true \
    --set global.persistence.storageClass=csi-hostpath-sc

# Make sure that every Pod is in the Running state before moving on

$ kubectl get po -n kasten-io

NAME READY STATUS RESTARTS AGE

aggregatedapis-svc-5cb69ccb87-f728h 1/1 Running 0 7m18s

auth-svc-59f8597cd-kkm87 1/1 Running 0 7m19s

catalog-svc-5cc7f8c865-g9wqt 2/2 Running 0 7m18s

controllermanager-svc-64c5fcf9c4-ncwjf 1/1 Running 0 7m19s

crypto-svc-cf68bdd99-n528w 4/4 Running 0 7m19s

dashboardbff-svc-669449d5f4-kxhrw 1/1 Running 0 7m19s

executor-svc-56d8fff95d-7q9gf 2/2 Running 0 7m19s

executor-svc-56d8fff95d-kc769 2/2 Running 0 7m19s

executor-svc-56d8fff95d-zzmhm 2/2 Running 0 7m19s

frontend-svc-7bff945b6c-mndws 1/1 Running 0 7m19s

gateway-78946b9fd7-rsq4t 1/1 Running 0 7m19s

jobs-svc-c556bffd7-jgwng 1/1 Running 0 7m18s

k10-grafana-58b85c856d-8pctz 1/1 Running 0 7m19s

kanister-svc-6db7f4d4bc-nhxdw 1/1 Running 0 7m19s

logging-svc-7b87c6dc8-zqfgg 1/1 Running 0 7m19s

metering-svc-b45bfb675-xzp26 1/1 Running 0 7m19s

prometheus-server-64877fdd68-z4ws6 2/2 Running 0 7m19s

state-svc-786864ddc6-tk99x 2/2 Running 0 7m18s
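Rather than scanning the pod list by eye, the check that every pod is up can be scripted. The sketch below is an illustration only: `not_ready_pods` is a hypothetical helper, and it assumes the default `kubectl get pods` column layout (NAME, READY, STATUS, ...).

```shell
#!/usr/bin/env bash
# Sketch: count the pods in a namespace that are not Running with all
# of their containers ready.

not_ready_pods() {
  local ns=$1
  kubectl get pods -n "$ns" --no-headers | awk '
    {
      split($2, r, "/")   # READY column, for example "2/2"
      if ($3 != "Running" || r[1] != r[2]) n++
    }
    END { print n + 0 }'
}

# Usage (needs a reachable cluster):
#   until [ "$(not_ready_pods kasten-io)" = "0" ]; do sleep 10; done
```

Note that a pod in the Completed state would also be counted as not ready by this sketch, which is fine for the K10 pods listed above but may need adjusting for other namespaces.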

# Expose the Kasten K10 gateway service as a NodePort so that the GUI can be reached from a browser

$ kubectl expose service gateway -n kasten-io --type=NodePort --name=gateway-nodeport

$ kubectl get svc -n kasten-io gateway-nodeport

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

gateway-nodeport NodePort 10.97.233.211 <none> 8000:32612/TCP 93s

$ kubectl get nodes -owide

NAME STATUS ROLES AGE VERSION INTERNAL-IP EXTERNAL-IP OS-IMAGE KERNEL-VERSION CONTAINER-RUNTIME

mars-k8s-1 Ready control-plane,master 20h v1.23.1 172.16.60.90 <none> Ubuntu 22.04.1 LTS 5.15.0-52-generic docker://20.10.21

☞ Open the K10 UI in a browser: http://172.16.60.90:32612/k10/#
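The dashboard URL is simply the node's internal IP plus the NodePort assigned to `gateway-nodeport`, so it can also be assembled from the cluster state. A minimal sketch, assuming a single-node cluster (the first node is used) and the service name created above; `k10_dashboard_url` is a hypothetical helper name.

```shell
#!/usr/bin/env bash
# Sketch: build the K10 dashboard URL from the node IP and the NodePort.
# Assumption: the first node in the list is the one to connect to.

k10_dashboard_url() {
  local ip port
  ip=$(kubectl get nodes \
    -o jsonpath='{.items[0].status.addresses[?(@.type=="InternalIP")].address}')
  port=$(kubectl get svc gateway-nodeport -n kasten-io \
    -o jsonpath='{.spec.ports[0].nodePort}')
  echo "http://${ip}:${port}/k10/#"
}

# Usage (needs a reachable cluster):
#   k10_dashboard_url
```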

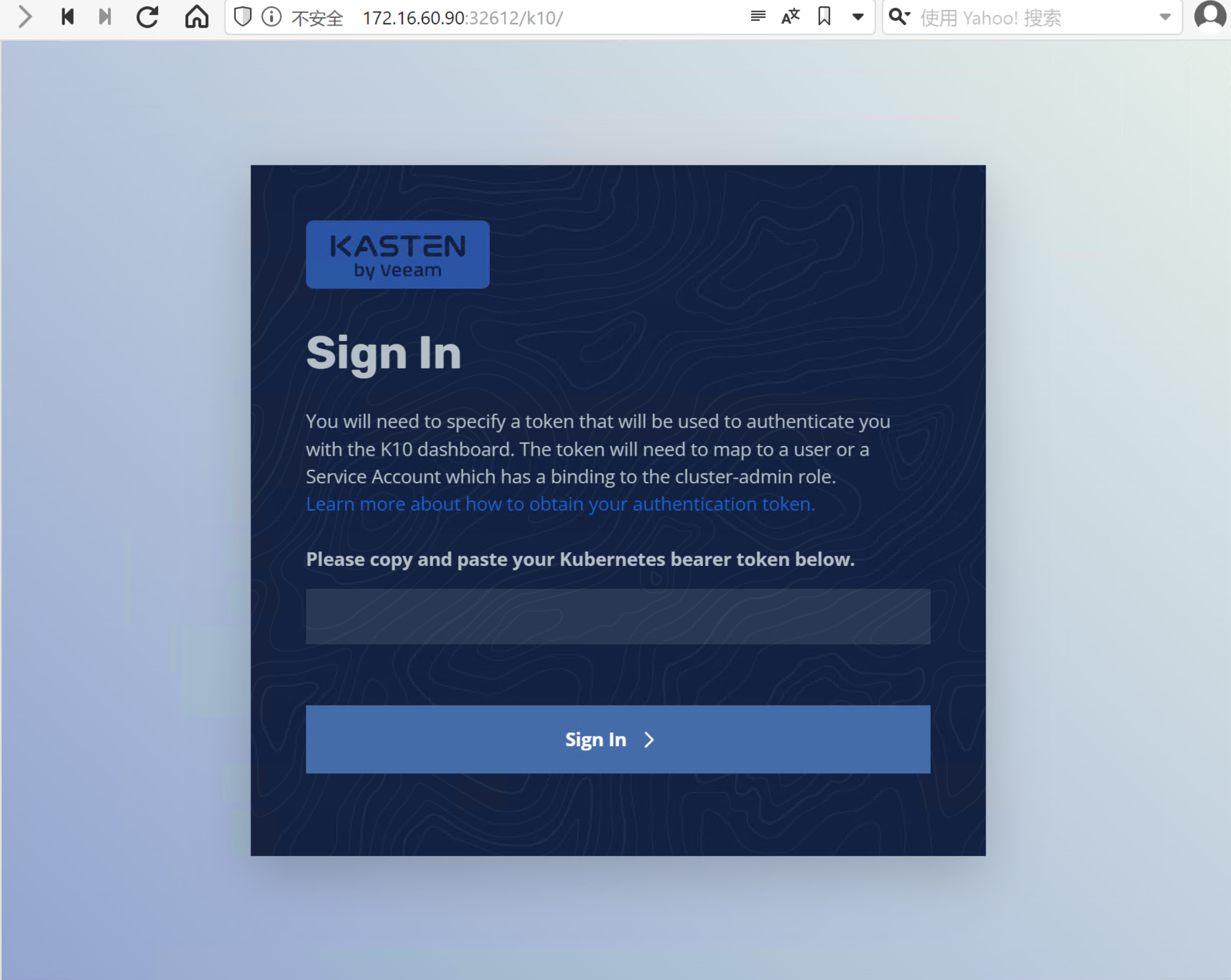

2.2. Log in to the Kasten K10 management GUI

☞ Open in a browser: http://172.16.60.90:32612/k10/#

☞ Use the following command to obtain a token for logging in to the K10 console

$ sa_secret=$(kubectl get serviceaccount k10-k10 -o jsonpath="{.secrets[0].name}" --namespace kasten-io) && kubectl get secret $sa_secret --namespace kasten-io -ojsonpath="{.data.token}{'\n'}" | base64 --decode

2.3. Install MinIO as the backup repository

# Create MinIO Namespace

$ kubectl create ns minio

# Create MinIO by manifest

$ cat <<EOF | kubectl -n minio create -f -
apiVersion: v1
kind: Service
metadata:
  name: minio
spec:
  ports:
  - port: 9000
    targetPort: 9000
  selector:
    app: minio
---
apiVersion: v1
kind: PersistentVolumeClaim
metadata:
  name: minio-pvc
spec:
  accessModes:
  - ReadWriteOnce
  resources:
    requests:
      storage: 30Gi
---
apiVersion: apps/v1
kind: StatefulSet
metadata:
  name: minio
spec:
  serviceName: "minio"
  replicas: 1
  selector:
    matchLabels:
      app: minio
  template:
    metadata:
      labels:
        app: minio
    spec:
      volumes:
      - name: data
        persistentVolumeClaim:
          claimName: minio-pvc
      containers:
      - name: minio
        volumeMounts:
        - name: data
          mountPath: "/data"
        image: minio/minio:RELEASE.2020-12-10T01-54-29Z
        args:
        - server
        - /data
        env:
        - name: MINIO_ACCESS_KEY
          value: "minio"
        - name: MINIO_SECRET_KEY
          value: "minio123"
        ports:
        - containerPort: 9000
          hostPort: 9000
EOF

#Check if MinIO Service running normally

$ kubectl get all -n minio

NAME READY STATUS RESTARTS AGE

pod/minio-0 1/1 Running 0 21m

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

service/minio ClusterIP 10.110.230.23 <none> 9000/TCP 21m

NAME READY AGE

statefulset.apps/minio 1/1 21m

#Expose MinIO Service for UI Access

$ kubectl expose service minio -n minio --type=NodePort --name=minio-nodeport

$ kubectl get svc -n minio && kubectl get node -o wide

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

minio ClusterIP 10.110.230.23 <none> 9000/TCP 23m

minio-nodeport NodePort 10.106.243.153 <none> 9000:31106/TCP 29s

NAME STATUS ROLES AGE VERSION INTERNAL-IP EXTERNAL-IP OS-IMAGE KERNEL-VERSION CONTAINER-RUNTIME

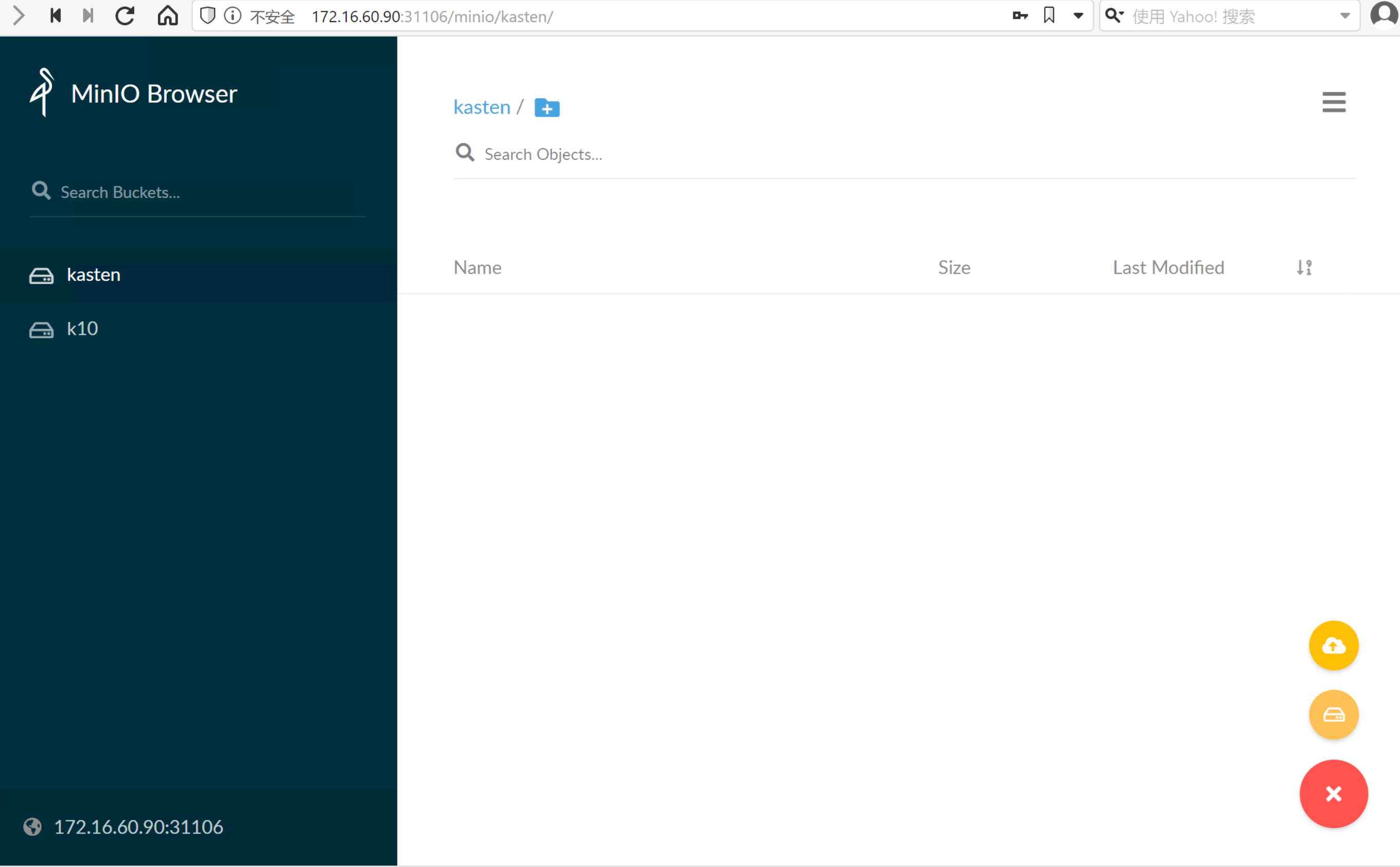

mars-k8s-1 Ready control-plane,master 21h v1.23.1 172.16.60.90 <none> Ubuntu 22.04.1 LTS 5.15.0-52-generic docker://20.10.21☞ 浏览器访问:http://172.16.60.90:31106/

Access Key: minio

Secret Key: minio123

☞ 点击硬盘图标,Create 名为 Kasten 的 Bucket

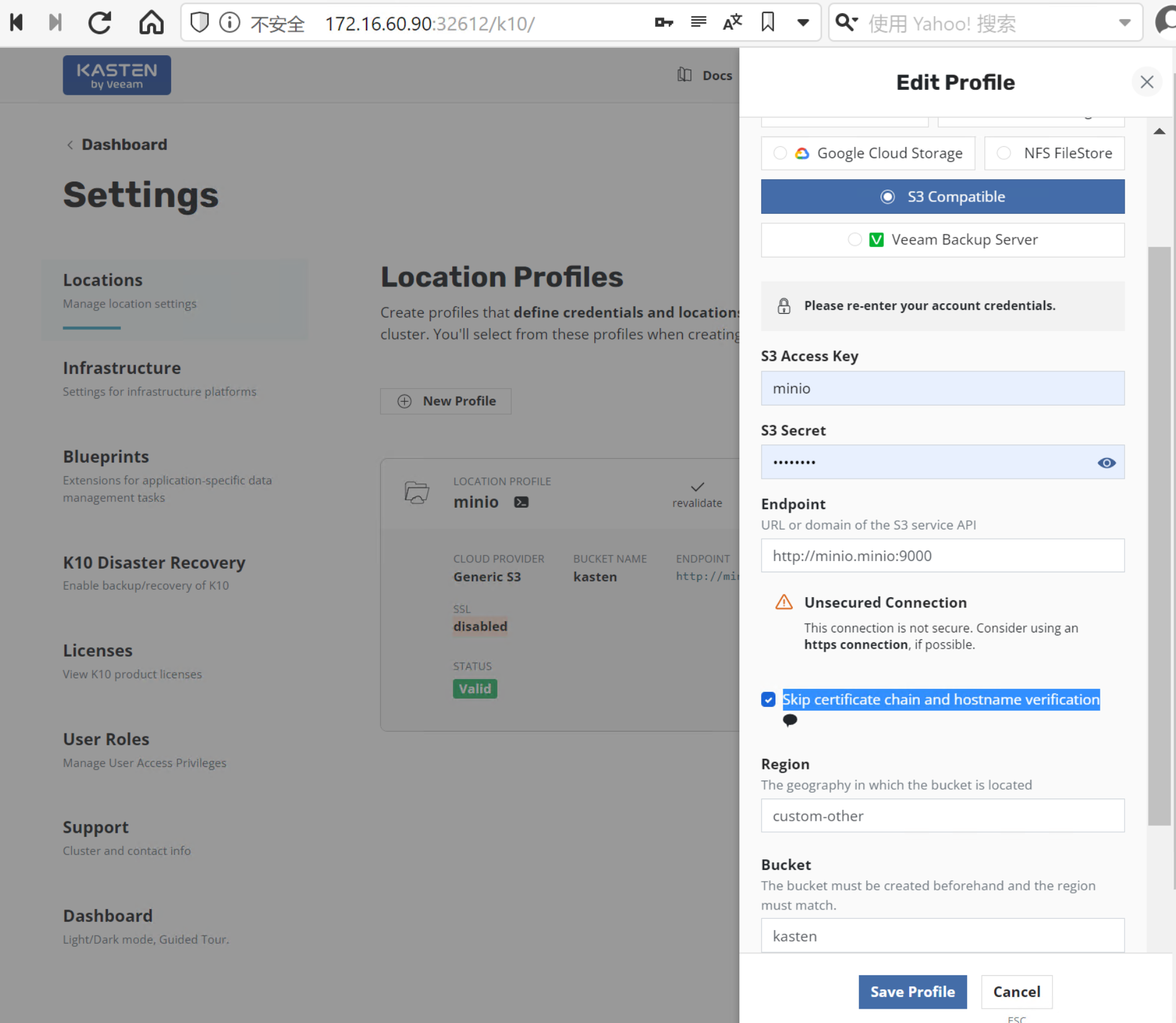

2.4. Configure MinIO as the backup repository in K10

Configure the repository in the K10 console:

Main page > Settings > Locations > Location Profiles > New Profile

Set the following parameters, then click "Save Profile"

- Profile Name: minio

- Cloud Storage Provider: S3 Compatible

- Access Key: minio

- Secret Key: minio123

- Endpoint: http://minio.minio:9000

- Check ☑ Skip certificate chain and hostname verification

- Region:

- Bucket: kasten

3. 利用快照进行应用程序的备份与恢复

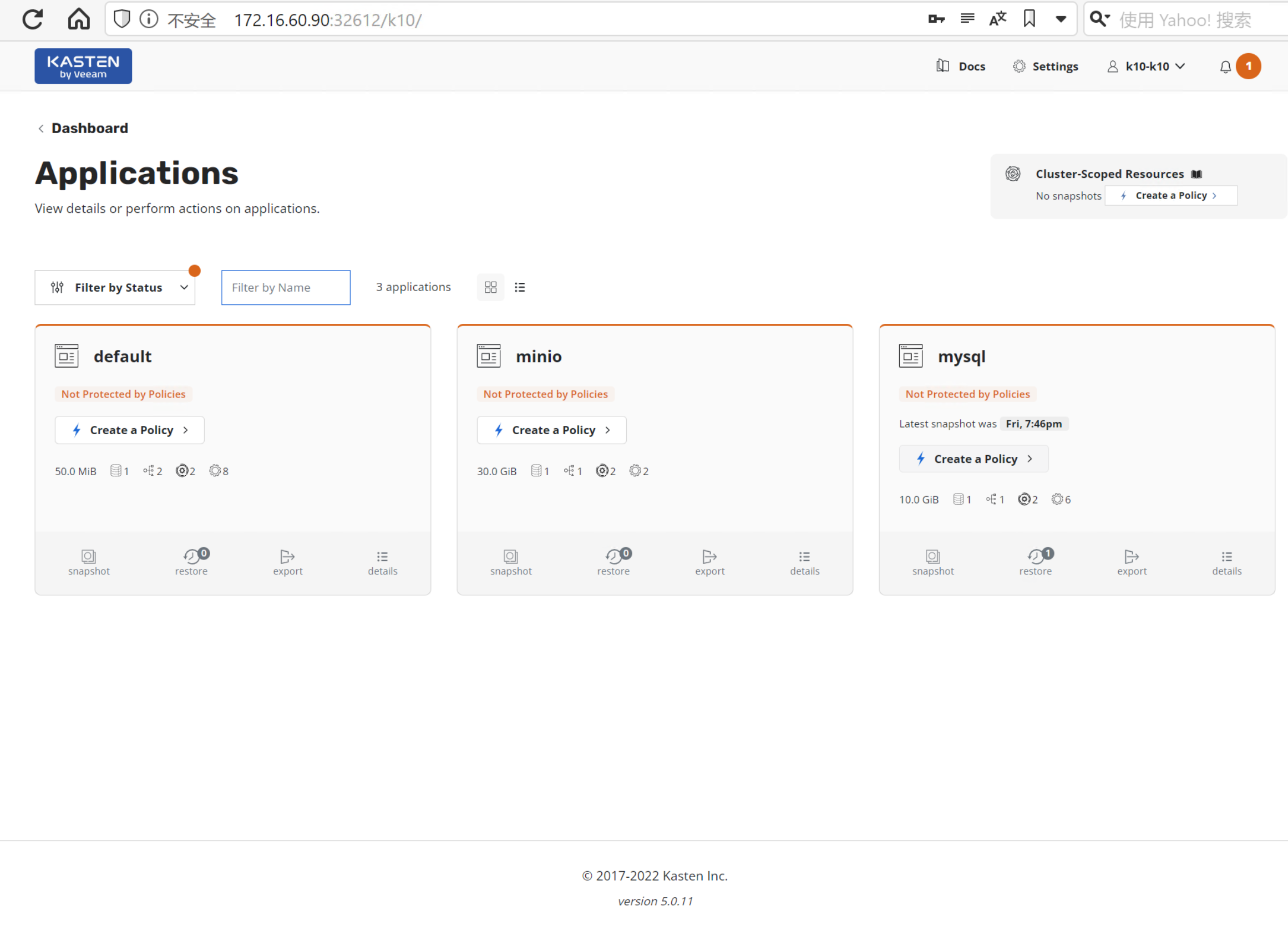

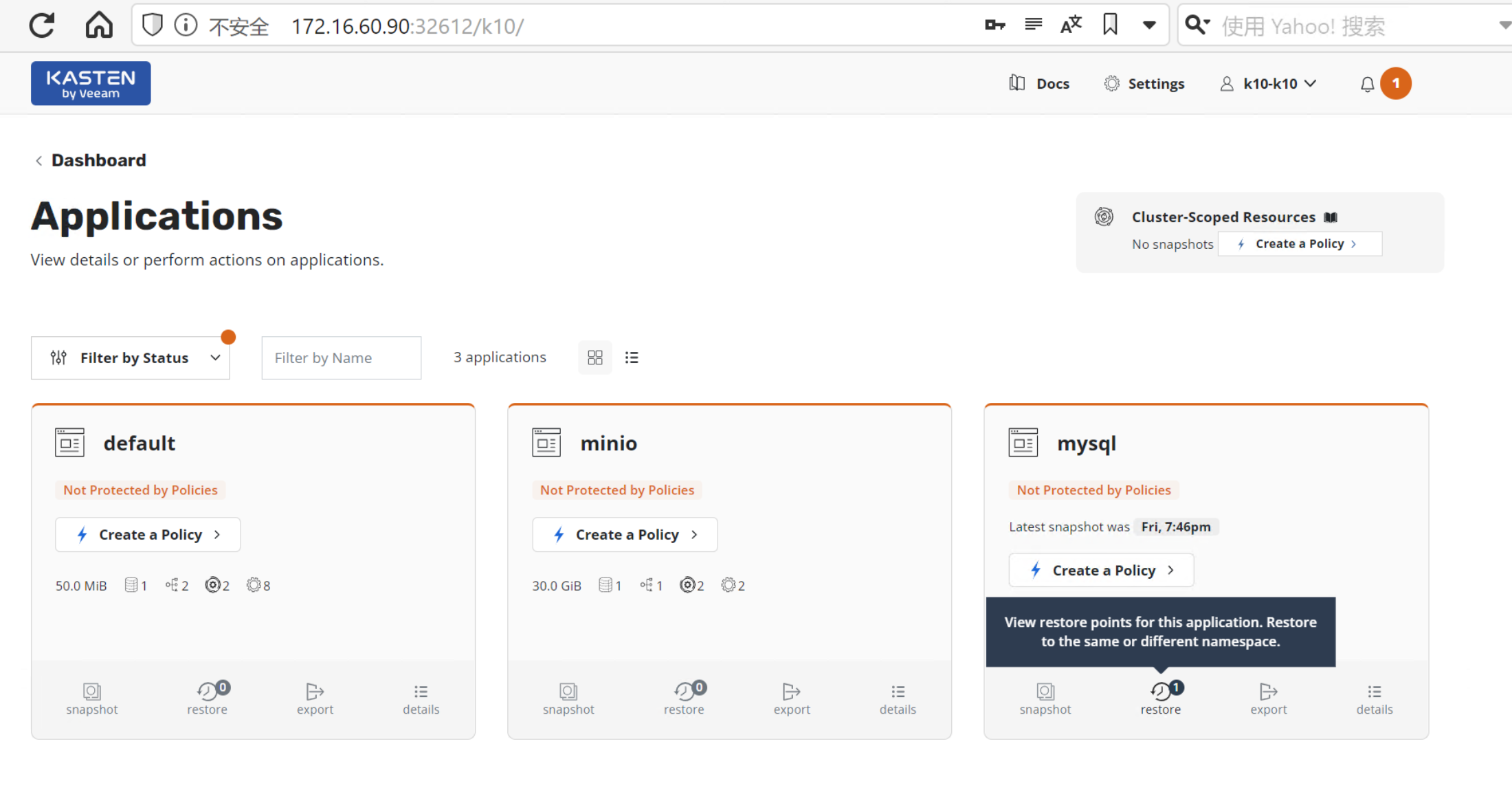

3.1. K10 应用程序发现

主界面 > Applications > mysql 找到我们需要备份的应用

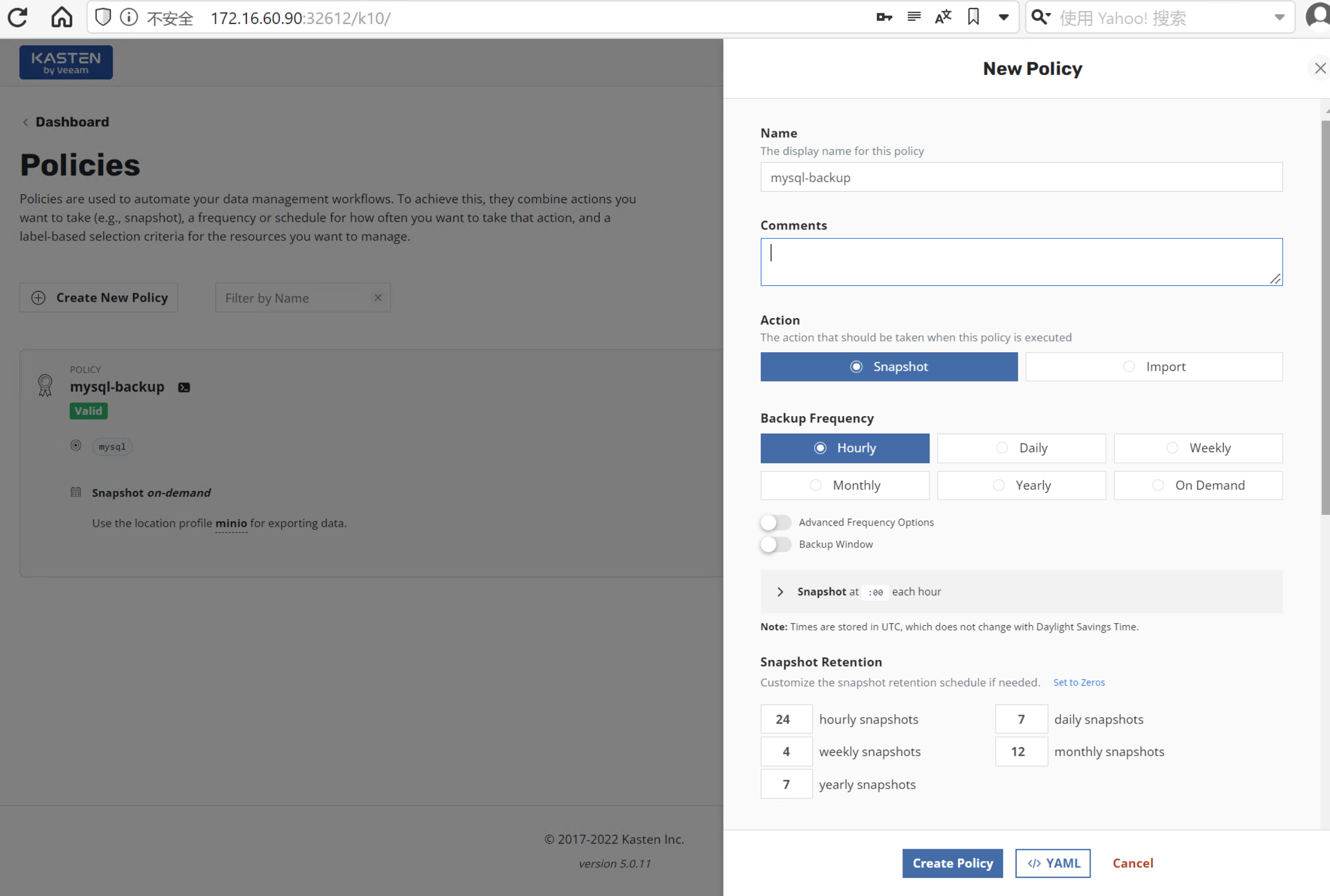

3.2. 创建 Policy 保护 mysql 应用

点击 Crete Policy > 创建备份的策略

选项一切都可默认,点击Create Policy

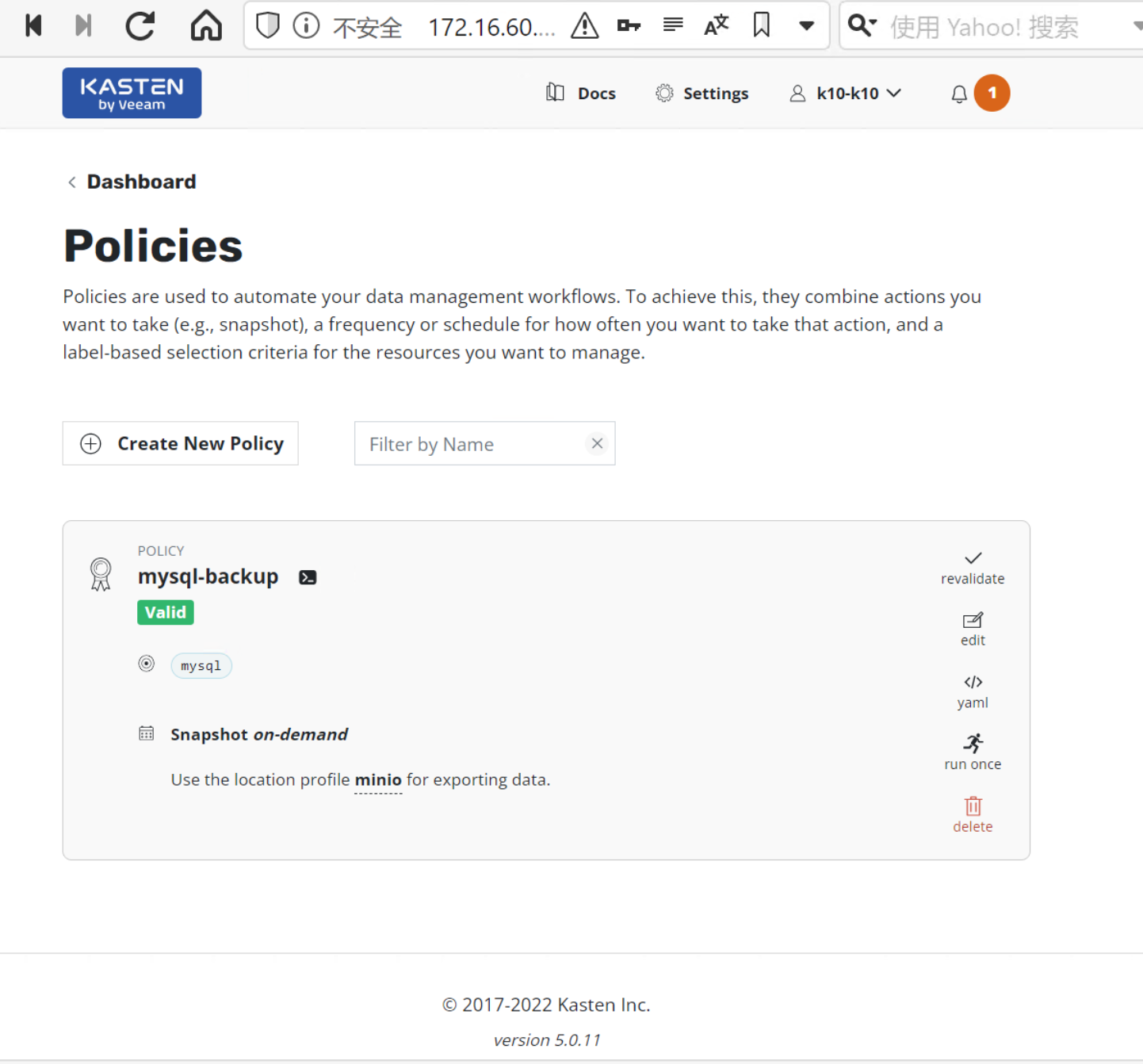

3.3. 执行备份策略

主界面 > Policies > mysql-backup > Run Once 执行应用备份

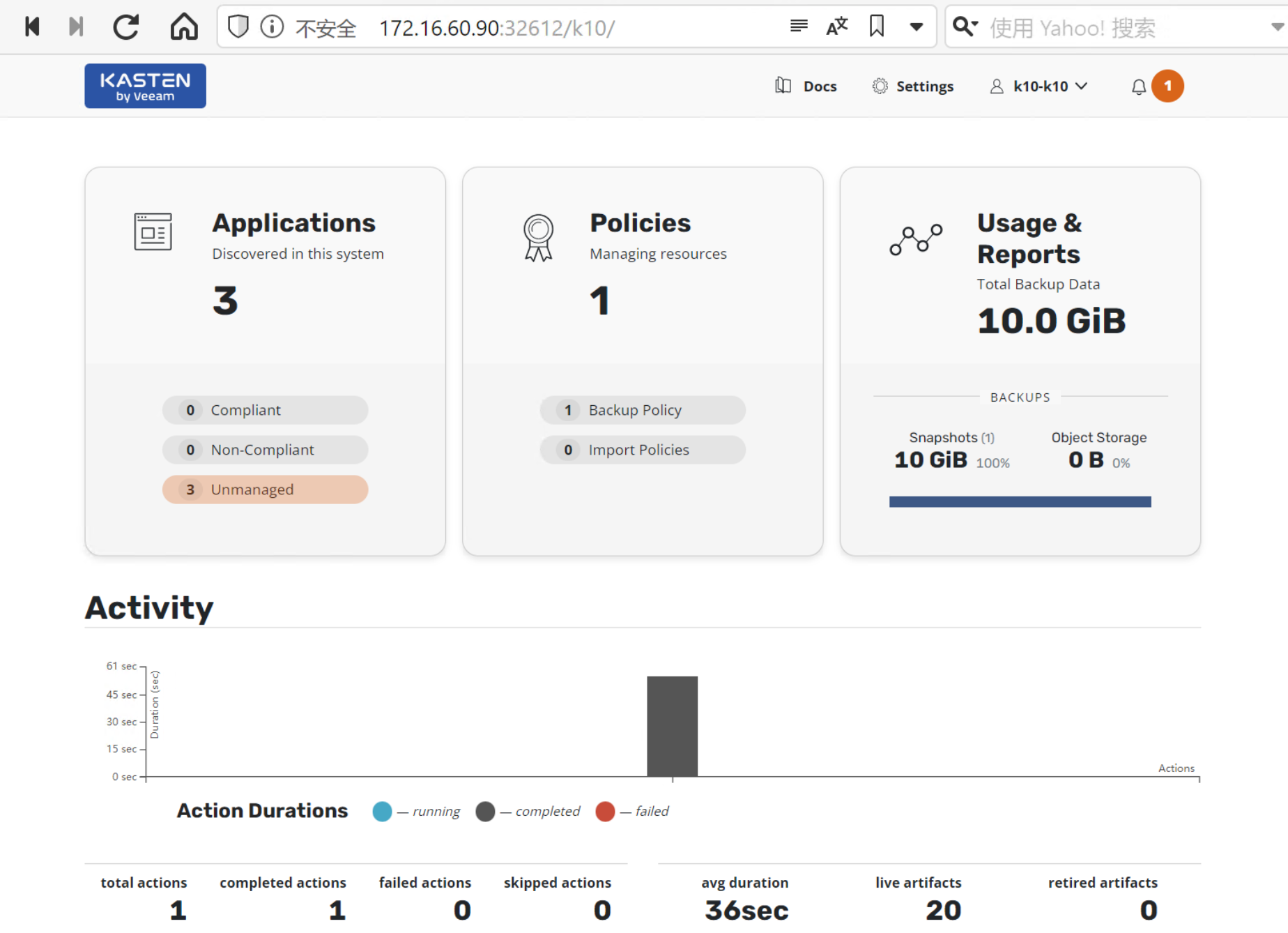

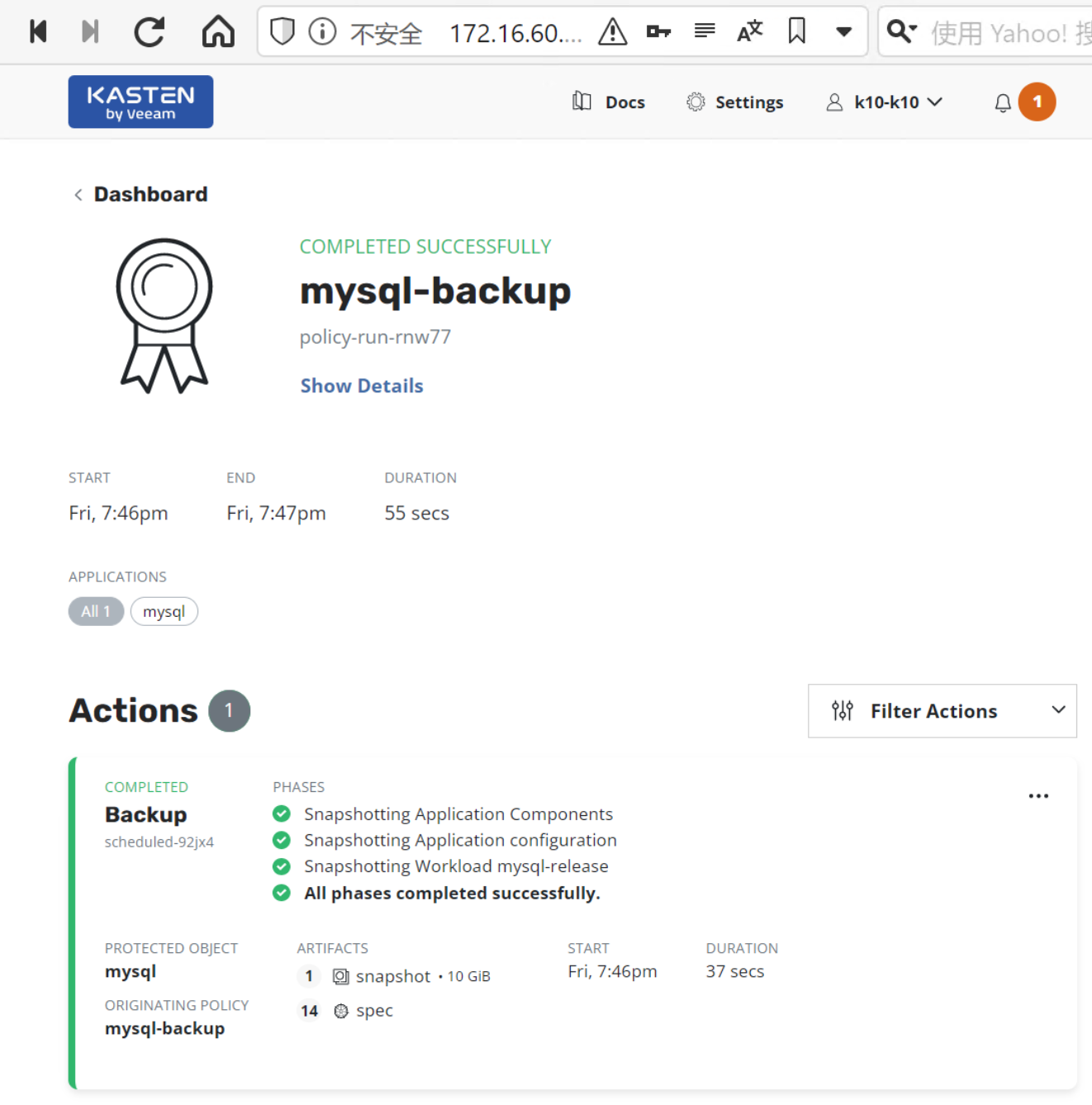

3.4. 查看 Dashboard 与命令行中查看备份的执行情况

主界面 > Actions > Policy Run > 点击运行任务查看备份结果

在命令行上查看快照调用的情况

# 查看 volumesnapshot

$ kubectl get volumesnapshot -n mysql

NAME READYTOUSE SOURCEPVC SOURCESNAPSHOTCONTENT RESTORESIZE SNAPSHOTCLASS SNAPSHOTCONTENT CREATIONTIME AGE

k10-csi-snap-r4tn4qk94h674rpg true data-mysql-release-0 10Gi csi-hostpath-snapclass snapcontent-af9a8c05-b077-4294-aa4f-e7e1d4265759 2d 2d

# List the volumesnapshotcontent objects

$ kubectl get volumesnapshotcontent -n mysql

NAME READYTOUSE RESTORESIZE DELETIONPOLICY DRIVER VOLUMESNAPSHOTCLASS VOLUMESNAPSHOT VOLUMESNAPSHOTNAMESPACE AGE

snapcontent-af9a8c05-b077-4294-aa4f-e7e1d4265759 true 10737418240 Delete hostpath.csi.k8s.io csi-hostpath-snapclass k10-csi-snap-r4tn4qk94h674rpg mysql 2d3.5. 在 K10 中还原 mysql 数据到另一个 Namespace

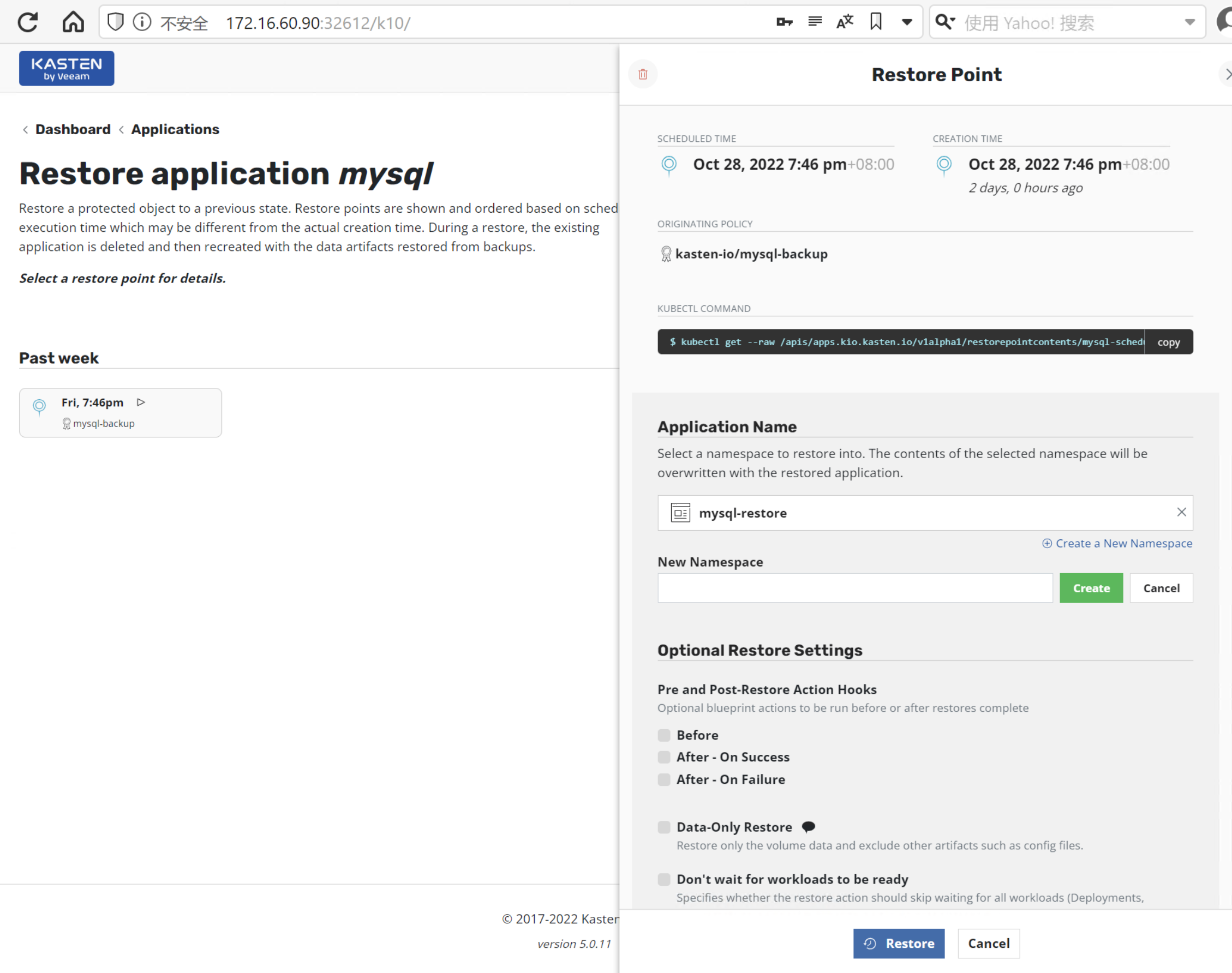

主界面 > Applications > mysql > 点击 Restore查看还原点

点击还原点 > 在 Applications Name 下 > 点击 Create a New Namespace > 输入 mysql-restore > 点击 Create > 点击 Restore

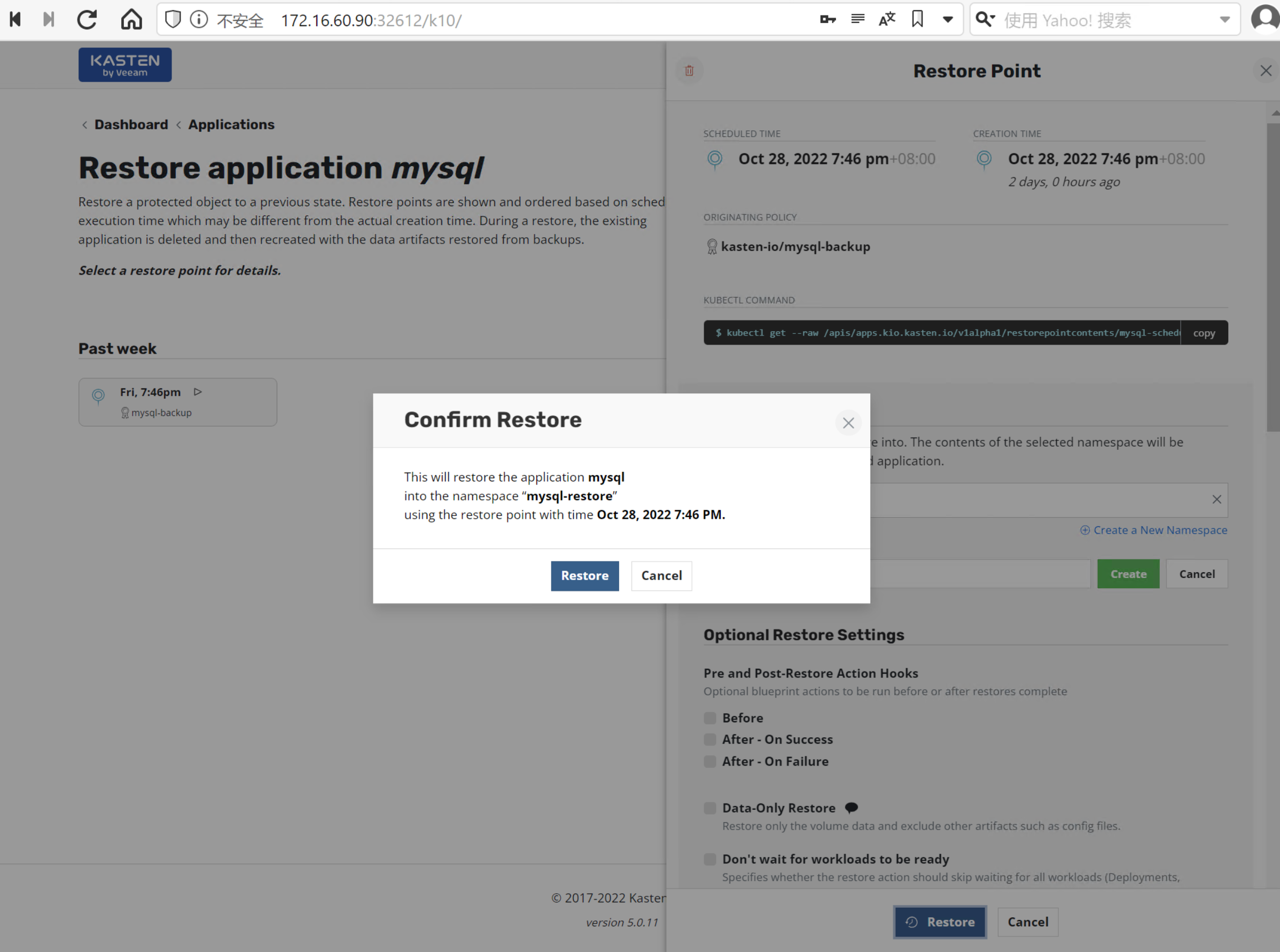

在 Confirm Restore 对话框中, 点击 Restore

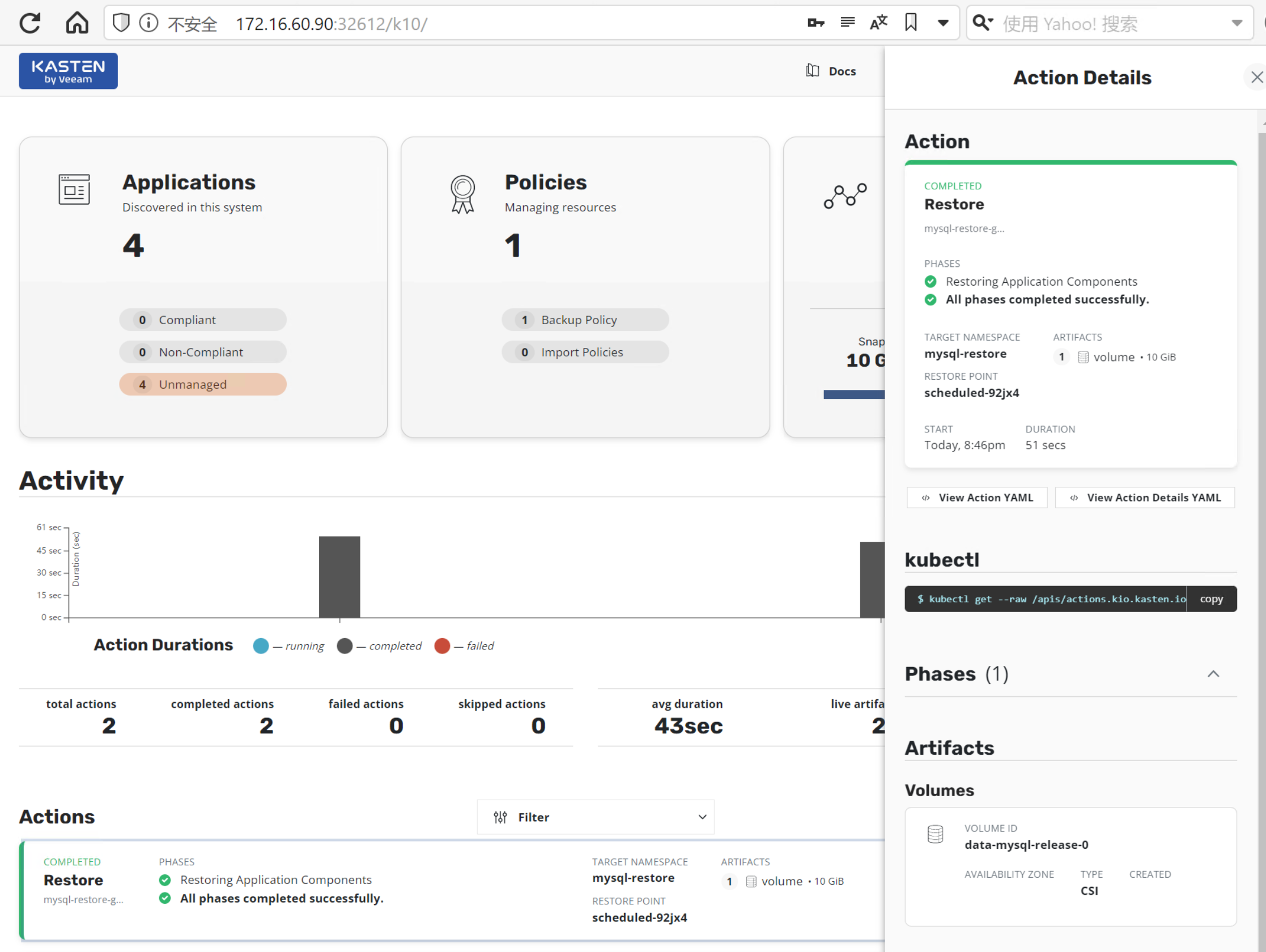

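3.6. Check the mysql-restore data restore in the Dashboard

3.7. Analyze how the data was restored

Now let us look at how the snapshot restore works. Listing the volumesnapshotcontent objects shows a snapshot named k10-csi-snap-wh9m8wgjg5gzj2x4 whose snapshot class is k10-clone-csi-hostpath-snapclass and whose DELETIONPOLICY is Retain; K10 clearly created it for the restore. Describing the PVC in the mysql-restore namespace then shows that the PVC was provisioned from exactly this snapshot.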
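The link between the restored PVC and the snapshot described above lives in the PVC's `spec.dataSource` field, so it can also be read directly instead of scanning the `describe` output. A minimal sketch, assuming the namespace and PVC names from this lab; the helper names `pvc_data_source` and `restored_from_snapshot` are hypothetical.

```shell
#!/usr/bin/env bash
# Sketch: show which object a PVC was provisioned from.

# Print "<kind>/<name>" of the PVC's data source ("/" if it has none).
pvc_data_source() {
  local ns=$1 pvc=$2
  kubectl get pvc "$pvc" -n "$ns" \
    -o jsonpath='{.spec.dataSource.kind}/{.spec.dataSource.name}'
}

# Succeed only if the PVC was created from a VolumeSnapshot.
restored_from_snapshot() {
  case "$(pvc_data_source "$1" "$2")" in
    VolumeSnapshot/?*) return 0 ;;
    *) return 1 ;;
  esac
}

# Usage (needs a reachable cluster):
#   pvc_data_source mysql-restore data-mysql-release-0
#   restored_from_snapshot mysql-restore data-mysql-release-0 && echo "restored from a snapshot"
```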

$ kubectl get volumesnapshotcontent -n mysql-restore

NAME READYTOUSE RESTORESIZE DELETIONPOLICY DRIVER VOLUMESNAPSHOTCLASS VOLUMESNAPSHOT VOLUMESNAPSHOTNAMESPACE AGE

k10-csi-snap-wh9m8wgjg5gzj2x4-content-9e561840-c004-4e0f-88e9-6c7374438a2f true 10737418240 Retain hostpath.csi.k8s.io k10-clone-csi-hostpath-snapclass k10-csi-snap-wh9m8wgjg5gzj2x4 mysql-restore 2m41s

snapcontent-af9a8c05-b077-4294-aa4f-e7e1d4265759 true 10737418240 Delete hostpath.csi.k8s.io csi-hostpath-snapclass k10-csi-snap-r4tn4qk94h674rpg mysql 2d1h

$ kubectl get pvc -n mysql-restore

mysql-restore data-mysql-release-0 Bound pvc-13d8ed12-0173-4f59-b7d5-b5b01d98ea16 1 csi-hostpath-sc 4m51s

$ kubectl -n mysql-restore describe pvc data-mysql-release-0

Name: data-mysql-release-0

Namespace: mysql-restore

StorageClass: csi-hostpath-sc

Status: Bound

Volume: pvc-13d8ed12-0173-4f59-b7d5-b5b01d98ea16

Labels: app.kubernetes.io/component=primary

app.kubernetes.io/instance=mysql-release

app.kubernetes.io/name=mysql

Annotations: pv.kubernetes.io/bind-completed: yes

pv.kubernetes.io/bound-by-controller: yes

volume.beta.kubernetes.io/storage-provisioner: hostpath.csi.k8s.io

volume.kubernetes.io/storage-provisioner: hostpath.csi.k8s.io

Finalizers: [kubernetes.io/pvc-protection]

Capacity: 10Gi

Access Modes: RWO

VolumeMode: Filesystem

DataSource:

APIGroup: snapshot.storage.k8s.io

Kind: VolumeSnapshot

Name: k10-csi-snap-wh9m8wgjg5gzj2x4

Used By: mysql-release-0

Events:

Type Reason Age From Message

---- ------ ---- ---- -------

Normal ExternalProvisioning 7m29s persistentvolume-controller waiting for a volume to be created, either by external provisioner "hostpath.csi.k8s.io" or manually created by system administrator

Normal Provisioning 7m29s hostpath.csi.k8s.io_csi-hostpathplugin-0_38bf5516-630c-48d9-abf4-56ec8da0599b External provisioner is provisioning volume for claim "mysql-restore/data-mysql-release-0"

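Normal ProvisioningSucceeded 7m28s hostpath.csi.k8s.io_csi-hostpathplugin-0_38bf5516-630c-48d9-abf4-56ec8da0599b Successfully provisioned volume pvc-13d8ed12-0173-4f59-b7d5-b5b01d98ea16

4. Summary

In this article we used a single, simple Ubuntu virtual machine to create a storage class and a snapshot class, and used Kasten K10 to call CSI snapshots for backup and restore. This gives anyone running K10 labs the same experience as with enterprise storage snapshots. Feel free to follow and share!

5. Reference links

Ubuntu download link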

https://ubuntu.com/download

external snapshotter controller

https://github.com/kubernetes-csi/external-snapshotter/tree/master/deploy/kubernetes/snapshot-controller

external snapshotter crd

https://github.com/kubernetes-csi/external-snapshotter/tree/v5.0.1/client/config/crd

Kasten Document

https://docs.kasten.io/latest/

kubectl get --raw /apis/apps.kio.kasten.io/v1alpha1/

{"kind":"APIResourceList","apiVersion":"v1","groupVersion":"apps.kio.kasten.io/v1alpha1","resources":[{"name":"applications","singularName":"","namespaced":true,"kind":"Application","verbs":["get","list"]},{"name":"applications/details","singularName":"","namespaced":true,"kind":"Application","verbs":["get"]},{"name":"clusterrestorepoints","singularName":"","namespaced":false,"kind":"ClusterRestorePoint","verbs":["delete","get","list"]},{"name":"clusterrestorepoints/details","singularName":"","namespaced":false,"kind":"ClusterRestorePoint","verbs":["get"]},{"name":"restorepointcontents","singularName":"","namespaced":false,"kind":"RestorePointContent","verbs":["delete","get","list"]},{"name":"restorepointcontents/details","singularName":"","namespaced":false,"kind":"RestorePointContent","verbs":["get"]},{"name":"restorepoints","singularName":"","namespaced":true,"kind":"RestorePoint","verbs":["create","delete","get","list"]},{"name":"restorepoints/details","singularName":"","namespaced":true,"kind":"RestorePoint","verbs":["get"]}]}

> kubectl get -n kasten-io reports.reporting.kio.kasten.io -oyaml

apiVersion: v1
items:
- apiVersion: reporting.kio.kasten.io/v1alpha1
  kind: Report
  metadata:
    creationTimestamp: "2023-03-22T09:46:18Z"
    generateName: scheduled-v4bsr-
    generation: 1
    labels:
      k10.kasten.io/doNotRetire: "true"
    name: scheduled-v4bsr-rrhwk
    namespace: kasten-io
    resourceVersion: "63811398"
    uid: afe8c694-d9db-4faa-afa3-3d9d4583c4e3
  results:
    actions:
      countStats:
        backup:
          cancelled: 0
          completed: 0
          failed: 0
          skipped: 0
        backupCluster:
          cancelled: 0
          completed: 1
          failed: 0
          skipped: 0
        export:
          cancelled: 0
          completed: 0
          failed: 0
          skipped: 0
        import:
          cancelled: 0
          completed: 0
          failed: 0
          skipped: 0
        report:
          cancelled: 0
          completed: 0
          failed: 0
          skipped: 0
        restore:
          cancelled: 0
          completed: 0
          failed: 0
          skipped: 0
        restoreCluster:
          cancelled: 0
          completed: 0
          failed: 0
          skipped: 0
        run:
          cancelled: 0
          completed: 1
          failed: 0
          skipped: 0
    compliance:
      applicationCount: 30
      compliantCount: 0
      nonCompliantCount: 0
      unmanagedCount: 30
    general:
      authType: token
      clusterId: 0a25e9a4-1de6-4e9f-bb6a-cf89af14e804
      infraType:
        isOpenShift: false
        provider: unknown
      k8sVersion: v1.23.13+rke2r1
      k10Version: 5.5.6
      k10namespace: kasten-io
    k10Services:
    - diskUsage:
        freeBytes: 6490685440
        freePercentage: 15
        usedBytes: 36436967424
      name: logging
    - diskUsage:
        freeBytes: 6490685440
        freePercentage: 15
        usedBytes: 36436967424
      name: catalog
    - diskUsage:
        freeBytes: 6490685440
        freePercentage: 15
        usedBytes: 36436967424
      name: jobs
    licensing:
      expiry: null
      nodeCount: 3
      nodeLimit: 5
      status: Valid
      type: Starter
    policies:
      k10DR:
        backupFrequency: '@onDemand'
        immutability:
          protection: Disabled
          protectionDays: 0
        profile: minio
        status: Enabled
      summaries:
      - actions:
        - backup
        frequency: '@hourly'
        name: cluster-scoped-resources-backup
        namespace: kasten-io
        profileNames: []
        validation: Success
      - actions:
        - report
        frequency: '@daily'
        name: k10-system-reports-policy
        namespace: kasten-io
        profileNames: []
        validation: Success
      - actions:
        - backup
        frequency: '@onDemand'
        name: pwms-backup
        namespace: kasten-io
        profileNames:
        - minio
        validation: Success
    profiles:
      summaries:
      - bucket: kasten
        endpoint: http://10.0.4.3:9000
        immutability:
          protection: Disabled
          protectionDays: 0
        name: minio
        objectStoreType: S3
        region: local
        sslVerification: SkipVerification
        type: Location
        validation: Success
    storage:
      objectStorage:
        count: 8
        logicalBytes: 4249154357
        physicalBytes: 16511882
      pvcStats:
        pvcBytes: 166429982720
        pvcCount: 19
      snapshotStorage:
        count: 0
        logicalBytes: 0
        physicalBytes: 0
  spec:
    reportTimestamp: "2023-03-22T09:46:14Z"
    statsIntervalDays: 1
    statsIntervalEndTimestamp: "2023-03-22T09:46:14Z"
    statsIntervalStartTimestamp: "2023-03-21T09:46:14Z"
kind: List
metadata:
  resourceVersion: ""
  selfLink: ""

kubectl get storagerepositories.repositories.kio.kasten.io kopia-volumedata-repository-trvcz5f4jv --namespace kasten-io -oyaml

apiVersion: repositories.kio.kasten.io/v1alpha1
kind: StorageRepository
metadata:
  creationTimestamp: "2023-03-03T14:32:53Z"
  labels:
    k10.kasten.io/appName: pwms
    k10.kasten.io/exportProfile: minio
  name: kopia-volumedata-repository-trvcz5f4jv
  namespace: kasten-io
  resourceVersion: "533"
  uid: 4823062e-b9d0-11ed-b828-d69ba0d3704c
status:
  appName: pwms
  backendType: kopia
  contentType: volumedata
  location:
    objectStore:
      endpoint: http://10.0.4.3:9000
      name: kasten
      objectStoreType: S3
      path: k10/0a25e9a4-1de6-4e9f-bb6a-cf89af14e804/migration/repo/7bafde09-dd63-41bb-80ab-d3da307a1b79/
      pathType: Directory
      region: local
      skipSSLVerify: true
    type: ObjectStore

kubectl get profiles.config.kio.kasten.io --namespace kasten-io

NAME STATUS AGE

minio Success 18d

kubectl get profiles.config.kio.kasten.io minio --namespace kasten-io -oyaml

apiVersion: config.kio.kasten.io/v1alpha1
kind: Profile
metadata:
  creationTimestamp: "2023-03-03T14:30:23Z"
  generation: 2
  name: minio
  namespace: kasten-io
  resourceVersion: "51712136"
  uid: 32bbc40e-a12d-4cde-8c24-32e3bcc5154a
spec:
  locationSpec:
    credential:
      secret:
        apiVersion: v1
        kind: secret
        name: k10secret-n7nx8
        namespace: kasten-io
      secretType: AwsAccessKey
    objectStore:
      endpoint: http://10.0.4.3:9000
      name: kasten
      objectStoreType: S3
      path: k10/0a25e9a4-1de6-4e9f-bb6a-cf89af14e804/migration
      pathType: Directory
      region: local
      skipSSLVerify: true
    type: ObjectStore
  type: Location
status:
  hash: 2681627176
  validation: Success

kubectl get restorepoints.apps.kio.kasten.io --all-namespaces

kubectl get restorepoints.apps.kio.kasten.io --namespace pwms

NAME CREATED AT

manualbackup-q9qds 2023-03-04T03:31:26Z

scheduled-nj5sn 2023-03-04T03:23:46Z

scheduled-2r89m 2023-03-04T03:10:54Z

scheduled-g64r4 2023-03-04T02:43:26Z

kubectl get restorepoints.apps.kio.kasten.io manualbackup-q9qds --namespace pwms -oyaml

apiVersion: apps.kio.kasten.io/v1alpha1
kind: RestorePoint
metadata:
  creationTimestamp: "2023-03-04T03:31:26Z"
  labels:
    k10.kasten.io/appName: pwms
    k10.kasten.io/appNamespace: pwms
  name: manualbackup-q9qds
  namespace: pwms
  resourceVersion: "535"
  uid: 0bac9a5a-ba3d-11ed-b828-d69ba0d3704c
spec:
  restorePointContentRef:
    name: pwms-manualbackup-q9qds
status:
  actionTime: "2023-03-04T03:31:26Z"
  logicalSizeBytes: 1030000000
  physicalSizeBytes: 271

kubectl get restorepointcontents.apps.kio.kasten.io \
    --selector=k10.kasten.io/appName=pwms

NAME CREATED AT

pwms-manualbackup-q9qds 2023-03-04T03:31:26Z

pwms-scheduled-nj5sn 2023-03-04T03:23:46Z

pwms-scheduled-2r89m 2023-03-04T03:10:54Z

pwms-scheduled-g64r4 2023-03-04T02:43:26Z

kubectl get restorepointcontents.apps.kio.kasten.io pwms-scheduled-nj5sn -oyaml

apiVersion: apps.kio.kasten.io/v1alpha1
kind: RestorePointContent
metadata:
  creationTimestamp: "2023-03-04T03:23:46Z"
  labels:
    k10.kasten.io/appName: pwms
    k10.kasten.io/appNamespace: pwms
    k10.kasten.io/isRunNow: "true"
    k10.kasten.io/policyName: pwms-backup
    k10.kasten.io/policyNamespace: kasten-io
    k10.kasten.io/runActionName: policy-run-mdrz4
  name: pwms-scheduled-nj5sn
  resourceVersion: "426"
  uid: f90630ed-ba3b-11ed-b828-d69ba0d3704c
status:
  actionTime: "2023-03-04T03:23:46Z"
  restorePointRef:
    name: scheduled-nj5sn
    namespace: pwms
  scheduledTime: "2023-03-04T03:23:39Z"
  state: Bound

kubectl get restorepoints.apps.kio.kasten.io --all-namespaces

NAMESPACE NAME CREATED AT

ns05 backup-ns05-hqxzw 2023-03-12T15:04:50Z

ns06 backup-ns06-nrfhn 2023-03-12T15:04:50Z

ns07 backup-ns07-nzbbc 2023-03-12T15:04:50Z

ns03 backup-ns03-z5trx 2023-03-12T15:04:49Z

ns04 backup-ns04-xm26r 2023-03-12T15:04:49Z

ns01 backup-ns01-h9qsh 2023-03-12T15:04:48Z

ns02 backup-ns02-xzszg 2023-03-12T15:04:48Z

ns07 backup-ns07-nn5lk 2023-03-12T15:02:35Z

ns05 backup-ns05-f52hr 2023-03-12T15:02:34Z

ns06 backup-ns06-v5kth 2023-03-12T15:02:34Z

ns02 backup-ns02-sr5qv 2023-03-12T15:02:33Z

ns03 backup-ns03-fd55j 2023-03-12T15:02:33Z

ns04 backup-ns04-9zzbr 2023-03-12T15:02:33Z

ns01 backup-ns01-jsgvk 2023-03-12T15:02:32Z

ns05 backup-ns05-dqrnr 2023-03-12T14:36:46Z

ns06 backup-ns06-lzqnt 2023-03-12T14:36:46Z

ns07 backup-ns07-gxntx 2023-03-12T14:36:46Z

ns03 backup-ns03-gxrll 2023-03-12T14:36:45Z

ns04 backup-ns04-7rns9 2023-03-12T14:36:45Z

ns01 backup-ns01-llttn 2023-03-12T14:36:44Z

ns02 backup-ns02-qt67z 2023-03-12T14:36:44Z

ns07 backup-ns07-6crpb 2023-03-12T13:44:52Z

ns05 backup-ns05-b56vp 2023-03-12T13:44:51Z

ns06 backup-ns06-5dhq5 2023-03-12T13:44:51Z

ns03 backup-ns03-jdzt7 2023-03-12T13:44:50Z

ns04 backup-ns04-p9ffq 2023-03-12T13:44:50Z

ns01 backup-ns01-ng6l4 2023-03-12T13:44:49Z

ns02 backup-ns02-l4rps 2023-03-12T13:44:49Z

ns01 backup-ns01-xt2x6 2023-03-12T13:43:55Z

pwms manualbackup-q9qds 2023-03-04T03:31:26Z

pwms scheduled-nj5sn 2023-03-04T03:23:46Z

pwms scheduled-2r89m 2023-03-04T03:10:54Z

pwms scheduled-g64r4 2023-03-04T02:43:26Z

kubectl get restorepoints.apps.kio.kasten.io manualbackup-q9qds --namespace pwms -oyaml |grep -E 'logicalSizeBytes|physicalSizeBytes'

kubectl get restorepoints.apps.kio.kasten.io scheduled-7s4k72q6gw --namespace mysql-restore -oyaml

# Print the logical and physical size of every restore point in the cluster

kubectl get restorepoints.apps.kio.kasten.io --all-namespaces --no-headers |
while read -r ns name _
do
  # Column 1 is the namespace, column 2 the restore point name
  echo "$ns/$name"
  kubectl get restorepoints.apps.kio.kasten.io "$name" --namespace "$ns" -oyaml </dev/null |
    grep -E 'logicalSizeBytes|physicalSizeBytes'
done
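The per-restore-point size fields queried above can also be totalled. The sketch below is an assumption-laden illustration: `sum_field` is a hypothetical helper that reads YAML text on standard input and adds up every line whose key matches the given field name, so it only works when each value sits on the same line as its key.

```shell
#!/usr/bin/env bash
# Sketch: sum all occurrences of a numeric "field: value" line read
# from standard input, for example logicalSizeBytes.

sum_field() {
  local field=$1
  # %.0f avoids integer clipping of large byte counts in some awk builds.
  awk -v f="${field}:" '$1 == f { total += $2 } END { printf "%.0f\n", total }'
}

# Usage (needs a reachable cluster; restore point names as listed above):
#   for rp in manualbackup-q9qds scheduled-nj5sn; do
#     kubectl get restorepoints.apps.kio.kasten.io "$rp" --namespace pwms -oyaml
#   done | sum_field logicalSizeBytes
```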

mars@mars-k8s-1:~$ kubectl get --raw /apis/apps.kio.kasten.io/v1alpha1/applications | jq -r '.items[0].metadata.name'

kubectl get pods --all-namespaces -o jsonpath="{.items[*].spec.containers[*].image}" |\
tr -s '[[:space:]]' '\n' |\
sort |\
uniq -c

kubectl get pods --namespace kasten-io -o jsonpath="{.items[*].spec.containers[*].image}" |\
tr -s '[[:space:]]' '\n' |\
sort |\
uniq -c

2 image: ccr.ccs.tencentyun.com/kasten/bloblifecyclemanager:6.0.2

2 image: ccr.ccs.tencentyun.com/kasten/crypto:6.0.2

2 image: ccr.ccs.tencentyun.com/kasten/events:6.0.2

2 image: ccr.ccs.tencentyun.com/kasten/garbagecollector:6.0.2

ccr.ccs.tencentyun.com/kasten/admin:6.0.2

ccr.ccs.tencentyun.com/kasten/aggregatedapis:6.0.2

ccr.ccs.tencentyun.com/kasten/auth:6.0.2

ccr.ccs.tencentyun.com/kasten/bloblifecyclemanager:6.0.2

ccr.ccs.tencentyun.com/kasten/catalog:6.0.2

ccr.ccs.tencentyun.com/kasten/cephtool:6.0.2

ccr.ccs.tencentyun.com/kasten/configmap-reload:6.0.2

ccr.ccs.tencentyun.com/kasten/controllermanager:6.0.2

ccr.ccs.tencentyun.com/kasten/crypto:6.0.2

ccr.ccs.tencentyun.com/kasten/dashboardbff:6.0.2

ccr.ccs.tencentyun.com/kasten/emissary:6.0.2

ccr.ccs.tencentyun.com/kasten/events:6.0.2

ccr.ccs.tencentyun.com/kasten/executor:6.0.2

ccr.ccs.tencentyun.com/kasten/frontend:6.0.2

ccr.ccs.tencentyun.com/kasten/garbagecollector:6.0.2

ccr.ccs.tencentyun.com/kasten/grafana:6.0.2

ccr.ccs.tencentyun.com/kasten/jobs:6.0.2

ccr.ccs.tencentyun.com/kasten/kanister:6.0.2

ccr.ccs.tencentyun.com/kasten/kanister-tools:k10-0.93.0

ccr.ccs.tencentyun.com/kasten/logging:6.0.2

ccr.ccs.tencentyun.com/kasten/metering:6.0.2

ccr.ccs.tencentyun.com/kasten/prometheus:6.0.2

ccr.ccs.tencentyun.com/kasten/state:6.0.2

ccr.ccs.tencentyun.com/kasten/vbrintegrationapi:6.0.2

aggregatedapis-svc-7965d69fb8-txrpq 1/1 Running 0 8h

auth-svc-7568966449-hzjfn 1/1 Running 0 8h

catalog-svc-7bd57d5f-pjs5f 2/2 Running 0 8h

controllermanager-svc-7bc6ff9bc-zzl6j 1/1 Running 0 8h

crypto-svc-674465d56d-cf4bt 4/4 Running 0 8h

dashboardbff-svc-5bb989cbd9-gjljx 2/2 Running 0 8h

executor-svc-5ff4d49fcf-29gv8 2/2 Running 0 8h

executor-svc-5ff4d49fcf-d4cws 2/2 Running 0 8h

executor-svc-5ff4d49fcf-fx9q6 2/2 Running 0 8h

frontend-svc-5fcb699996-ktv6n 1/1 Running 0 8h

gateway-d747f797-zt4nw 1/1 Running 0 8h

jobs-svc-88b49cfd5-9j96p 1/1 Running 0 8h

k10-grafana-6d745654f5-4tn4x 1/1 Running 0 8h

kanister-svc-6696987d75-fphts 1/1 Running 0 8h

logging-svc-76bd7c976-cj9zz 1/1 Running 0 8h

metering-svc-554789c888-788mk 1/1 Running 0 8h

prometheus-server-bc497c594-hjv86 2/2 Running 0 8h

state-svc-cb8ddd78b-rklm8 2/2 Running 0 8h

kubectl get pod catalog-svc-7bd57d5f-pjs5f -n kasten-io -o yaml | grep image:

kubectl get pod controllermanager-svc-7bc6ff9bc-zzl6j -n kasten-io -o yaml | grep image:

kubectl get pod crypto-svc-674465d56d-cf4bt -n kasten-io -o yaml | grep image:

kubectl get pod state-svc-cb8ddd78b-rklm8 -n kasten-io -o yaml | grep image: |sort|uniq -c

kubectl get po -n kasten-io | awk 'NR>1 {print $1}' |xargs -I{} kubectl get pod {} -n kasten-io -o yaml | grep image: |sort|uniq -c
